Installation & Configuration

I installed the DeepSeek-R1 model locally using Ollama.

Initial Performance Testing

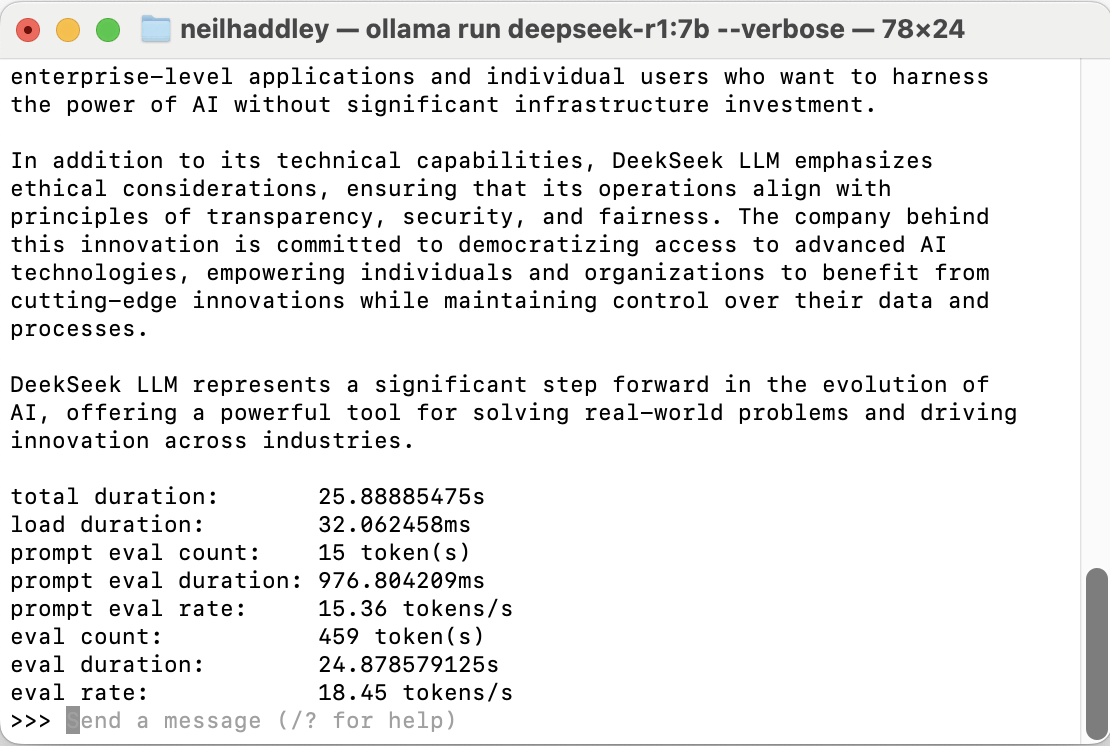

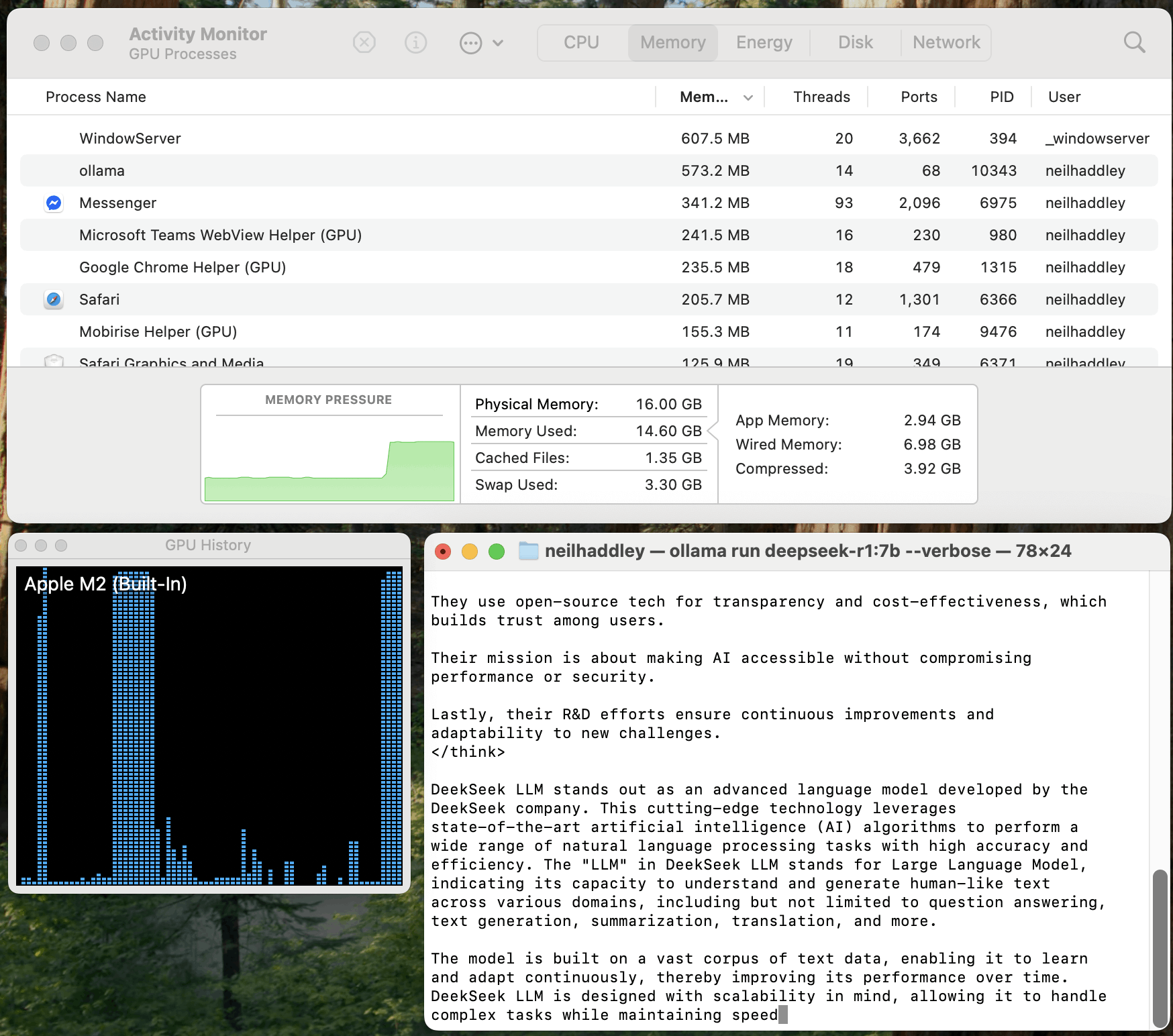

The 7 billion parameter variant generated output in approximately 25 seconds on my M2 MacBook Air with 16 GB of unified memory.

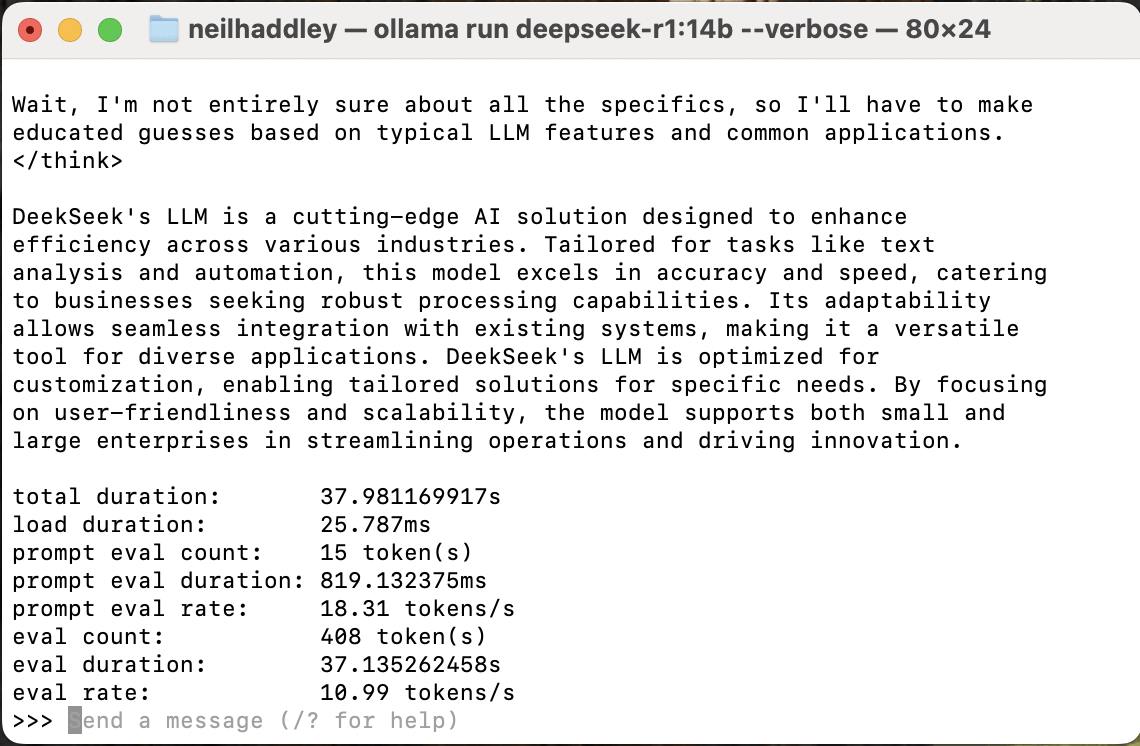

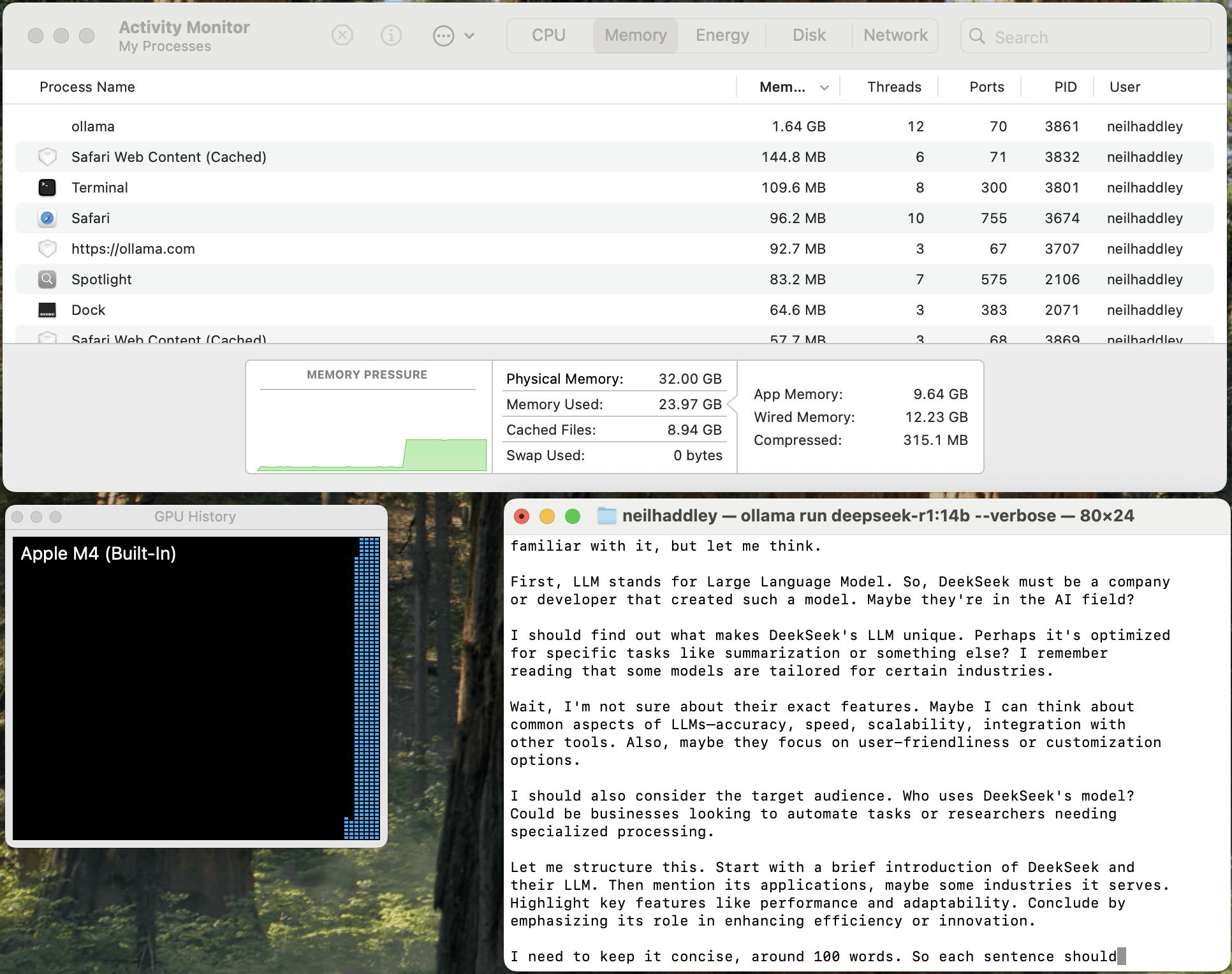

The 14 billion parameter variant generated output in approximately 40 seconds on my M4 MacBook Air with 32 GB of unified memory.

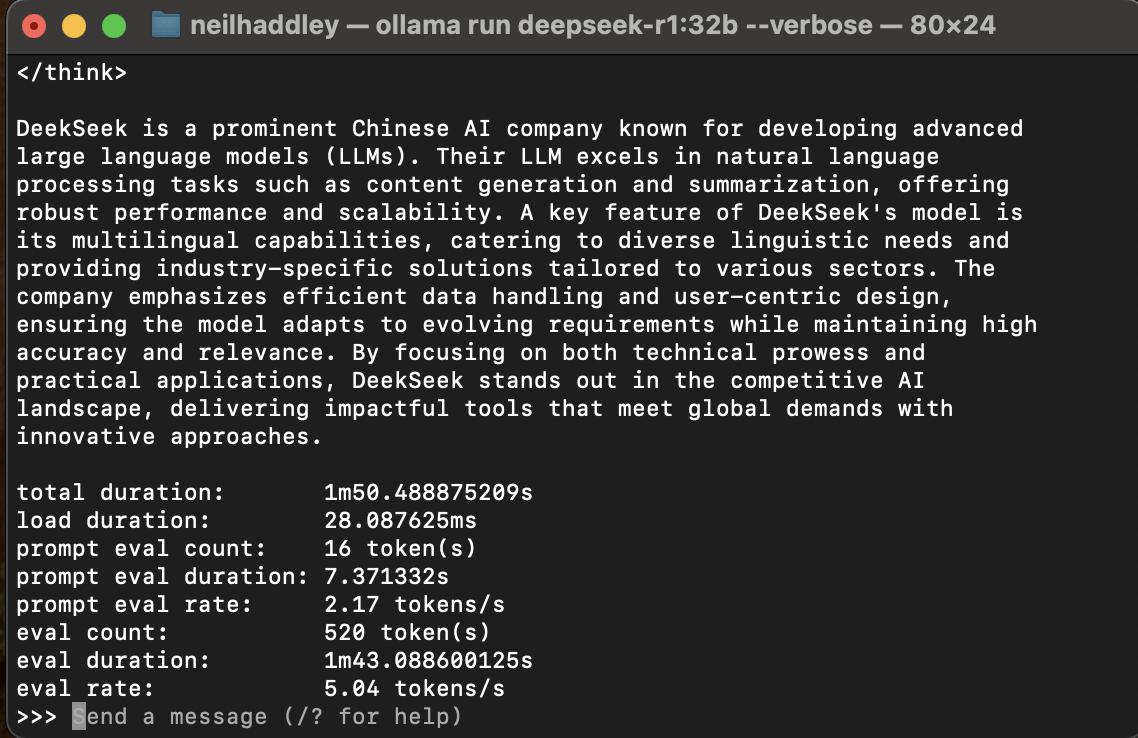

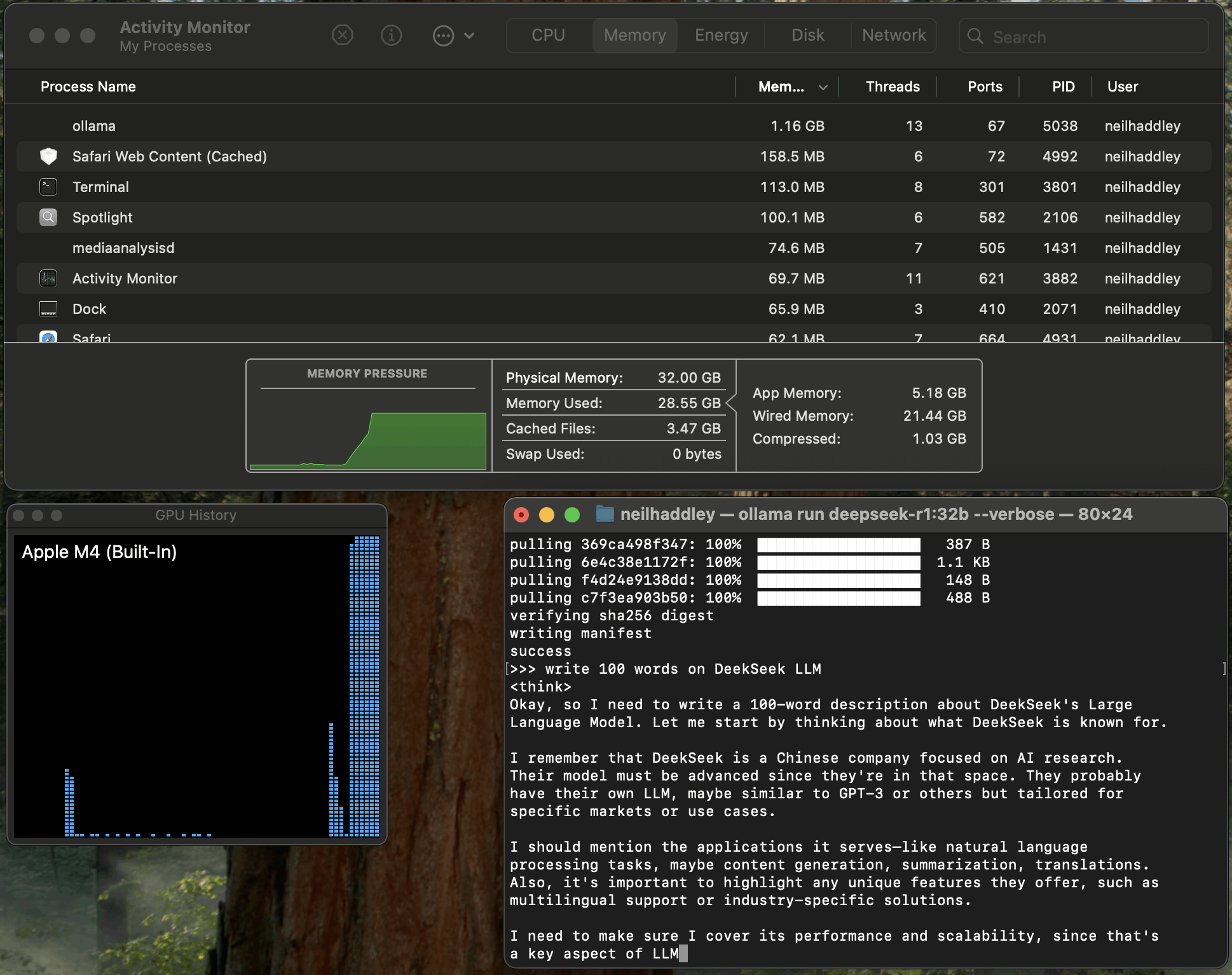

The 32 billion parameter variant generated output in approximately 1 minute and 50 seconds on my M4 MacBook Air with 32 GB of unified memory.

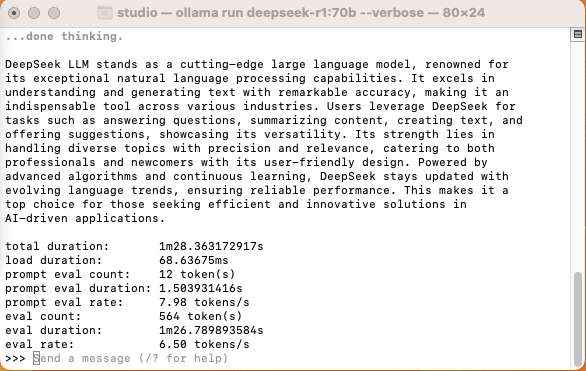

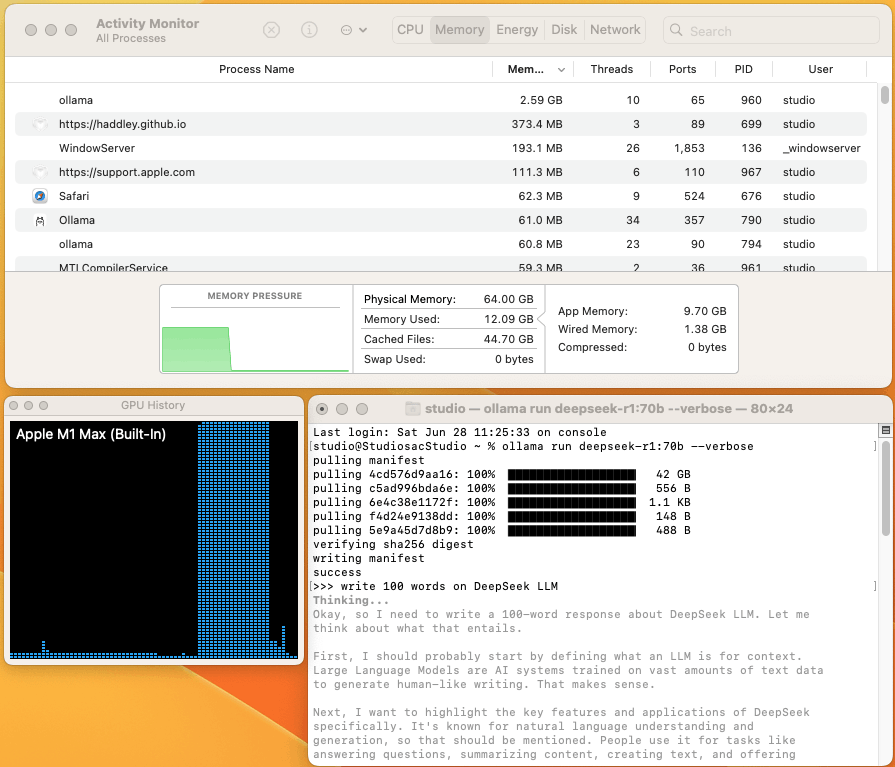

The 70 billion parameter variant generated output in approximately 1 minute and 28 seconds on my M1 Max Mac Studio with 64 GB of unified memory.
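A rough estimate helps explain why the larger variants were run on machines with more memory: the weights take about parameters × bits per weight ÷ 8 bytes. This is only an approximation under stated assumptions. It assumes about 4.5 bits per weight (typical of 4-bit quantized builds) and a hypothetical 75% share of unified memory being usable for the model. Neither figure comes from Ollama, so compare the estimate against the download size listed for each tag.

```python
# Rough weight-memory estimate for a quantized model.
# ASSUMPTIONS: ~4.5 bits per weight (typical of 4-bit quantization) and a
# hypothetical 75% "usable" share of unified memory. Neither value comes
# from Ollama; adjust both to match the model file you actually download.
# Only the weights are counted; the context window needs extra memory.

DEFAULT_BITS_PER_WEIGHT = 4.5
DEFAULT_USABLE_FRACTION = 0.75


def estimate_weight_gb(params_billion: float,
                       bits_per_weight: float = DEFAULT_BITS_PER_WEIGHT) -> float:
    """Approximate size of the weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    # params * bits / 8 gives bytes; expressing params in billions yields GB directly.
    return params_billion * bits_per_weight / 8


def fits_in_memory(params_billion: float, memory_gb: float,
                   bits_per_weight: float = DEFAULT_BITS_PER_WEIGHT,
                   usable_fraction: float = DEFAULT_USABLE_FRACTION) -> bool:
    """True if the estimated weights fit in the assumed usable share of memory."""
    return estimate_weight_gb(params_billion, bits_per_weight) <= memory_gb * usable_fraction


# The variant/machine pairs tested above.
for params, memory in [(7, 16), (14, 32), (32, 32), (70, 64)]:
    size = estimate_weight_gb(params)
    print(f"{params}B on {memory} GB: ~{size:.1f} GB of weights, "
          f"fits={fits_in_memory(params, memory)}")
```

Because the context window is ignored, treat a borderline result as a warning rather than a guarantee.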

I downloaded Ollama from https://ollama.com/download.

I clicked Open.

I clicked Move to Applications.
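After moving the app to Applications and launching it, you can check from a script whether the `ollama` command-line tool is reachable. This is a minimal sketch that assumes the CLI is on your PATH and supports `ollama --version`; `ollama_version` is a hypothetical helper name.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version() -> Optional[str]:
    """Return the output of `ollama --version`, or None if the CLI cannot be run."""
    path = shutil.which("ollama")  # full path to the binary, or None if not on PATH
    if path is None:
        return None
    try:
        result = subprocess.run([path, "--version"], capture_output=True,
                                text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return None
    # Combine stdout and stderr in case the version line or a warning
    # is written to either stream.
    return (result.stdout + result.stderr).strip()
```

Calling `ollama_version()` returns the version text, or `None` if the command is missing, which usually means the CLI is not on the PATH yet.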

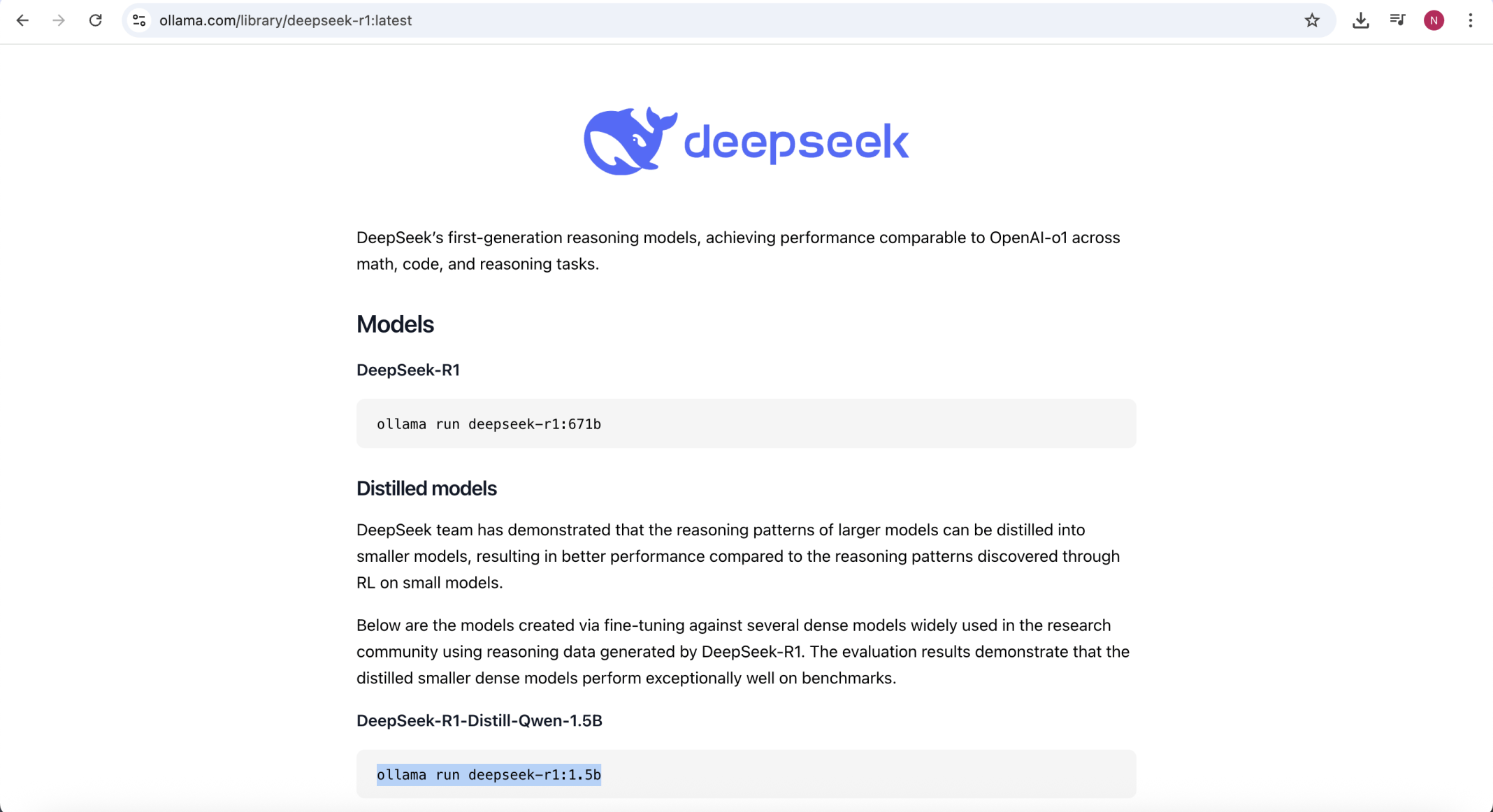

I searched for the DeepSeek-R1 model.

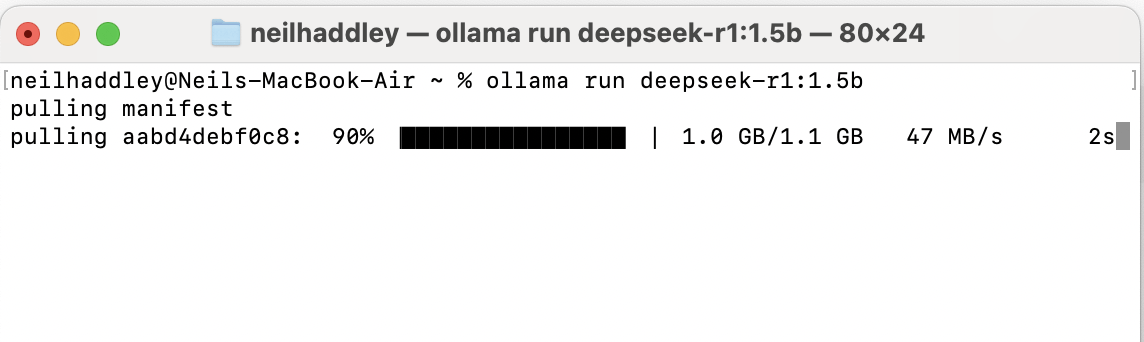

1.5B Model:

Suited to embedded AI (IoT devices), high-volume, low-latency tasks (e.g., spam filtering, simple Q&A), and prototyping.

ollama run deepseek-r1:1.5b
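Besides the interactive `ollama run` session, Ollama serves a local HTTP API. A minimal sketch, assuming the server listens on its default address `http://localhost:11434` and using the `/api/generate` endpoint with `stream` set to false so the reply arrives as a single JSON object; `build_request` and `generate` are hypothetical helper names.

```python
import json
import urllib.request

# ASSUMPTION: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 600.0) -> dict:
    """Send the prompt and return the decoded JSON reply (the text is under 'response')."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `generate("deepseek-r1:1.5b", "write 100 words on DeepSeek LLM")["response"]` would return the generated text, provided the model has already been pulled.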

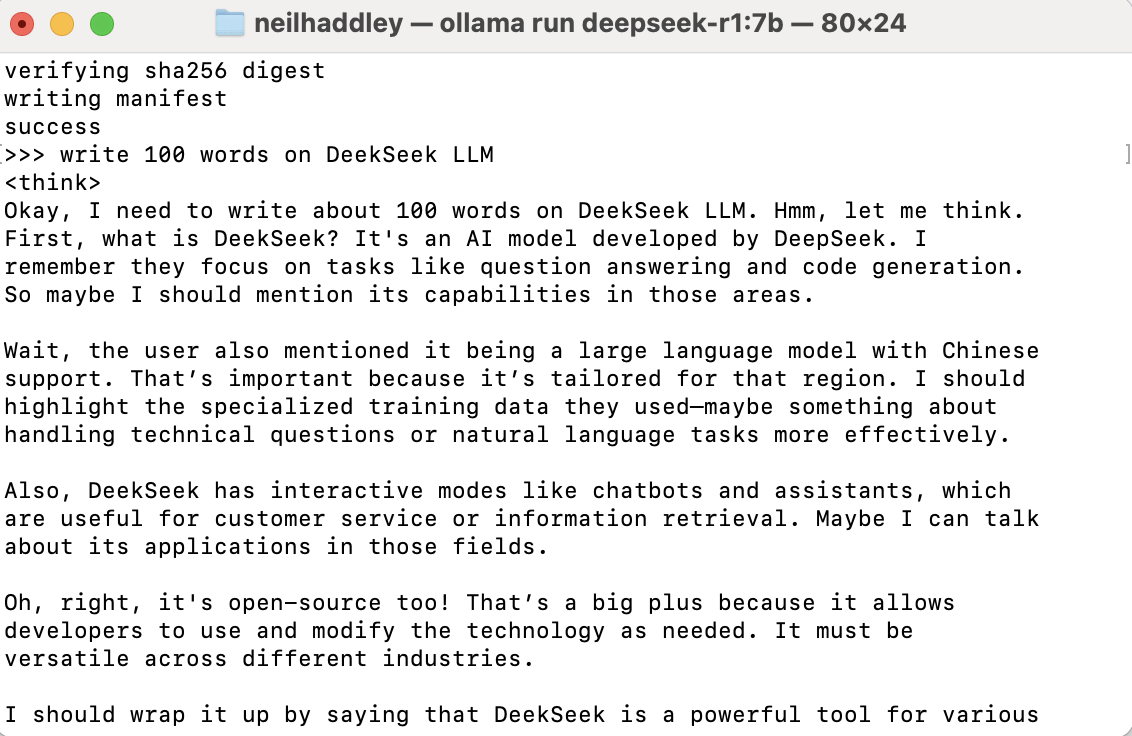

Prompt: write 100 words on DeepSeek LLM

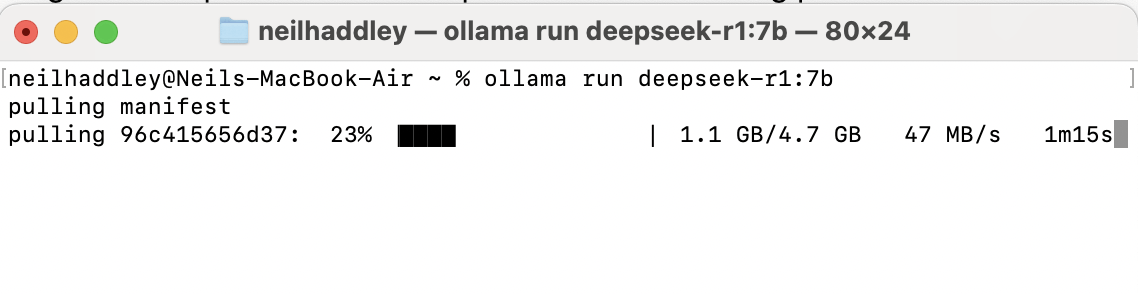

7B Model:

Suited to general-purpose chatbots, content moderation, and mid-tier automation (e.g., customer support, basic data analysis).

ollama run deepseek-r1:7b

Prompt: write 100 words on DeepSeek LLM

To print timing statistics after each response, add the --verbose flag:

ollama run deepseek-r1:7b --verbose
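With `--verbose`, Ollama prints statistics such as total duration and eval rate after each response. If you capture or paste that output, a small parser can pull out the numbers for comparison across variants. This sketch assumes the one-statistic-per-line `label: value` layout; the label names are assumptions based on what recent Ollama versions print, and `parse_verbose_stats` and `tokens_per_second` are hypothetical helper names.

```python
from typing import Dict

# ASSUMED labels printed by `ollama run ... --verbose` after a response.
STAT_LABELS = {
    "total duration", "load duration",
    "prompt eval count", "prompt eval duration", "prompt eval rate",
    "eval count", "eval duration", "eval rate",
}


def parse_verbose_stats(text: str) -> Dict[str, str]:
    """Collect the known --verbose statistics from captured terminal output."""
    stats: Dict[str, str] = {}
    for line in text.splitlines():
        label, sep, value = line.partition(":")
        # Skip lines of model output that merely contain a colon.
        if sep and label.strip() in STAT_LABELS:
            stats[label.strip()] = value.strip()
    return stats


def tokens_per_second(stats: Dict[str, str]) -> float:
    """Generation speed from the 'eval rate' value, e.g. '10.00 tokens/s' -> 10.0."""
    return float(stats["eval rate"].split()[0])
```

Restricting the parser to known labels keeps colons inside the model's answer from being mistaken for statistics.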

Tested on an M2 MacBook Air with 16 GB of unified memory.

14B Model:

Suited to enterprise-grade applications (e.g., advanced chatbots, research tools, code assistants).

Also suited to scenarios requiring deep domain expertise or high accuracy (e.g., legal document analysis, financial forecasting).

ollama run deepseek-r1:14b --verbose

Tested on an M4 MacBook Air with 32 GB of unified memory.
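When Ollama is called over HTTP with `stream` set to false, the JSON reply carries the same kind of statistics that `--verbose` prints, as fields such as `total_duration`, `eval_count`, and `eval_duration`, with durations in nanoseconds. A minimal sketch that converts them into readable numbers, assuming those field names; `summarize_metrics` is a hypothetical helper name.

```python
NS_PER_SECOND = 1_000_000_000  # the API reports durations in nanoseconds


def summarize_metrics(response: dict) -> dict:
    """Turn duration/count fields from an /api/generate reply into seconds and tokens/s."""
    total_s = response["total_duration"] / NS_PER_SECOND
    eval_s = response["eval_duration"] / NS_PER_SECOND
    tokens = response["eval_count"]
    return {
        "total_seconds": total_s,
        "generated_tokens": tokens,
        # Guard against a zero generation time (e.g. an empty reply).
        "tokens_per_second": tokens / eval_s if eval_s > 0 else 0.0,
    }
```

Tokens per second is a fairer comparison between variants than total time, because longer answers naturally take longer.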

32B Model:

Ideal for applications prioritizing accuracy over speed, such as advanced research, data analysis, or generating long-form content.

ollama run deepseek-r1:32b --verbose

Tested on an M4 MacBook Air with 32 GB of unified memory.
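To repeat the comparison across variants without typing each command, the runs can be scripted. A sketch assuming `ollama run` accepts the prompt as a trailing argument and exits after a single reply (the Ollama README shows this one-shot form); `build_command` and `run_variant` are hypothetical helper names, and the long default timeout is an arbitrary choice for the slower variants.

```python
import subprocess
from typing import List

TAGS = ["7b", "14b", "32b", "70b"]  # the variants compared in this write-up
PROMPT = "write 100 words on DeepSeek LLM"


def build_command(tag: str, prompt: str = PROMPT) -> List[str]:
    """Command line for one non-interactive, verbose run of a single variant."""
    return ["ollama", "run", f"deepseek-r1:{tag}", "--verbose", prompt]


def run_variant(tag: str, prompt: str = PROMPT, timeout: float = 1800) -> str:
    """Run one variant and return everything it printed (reply plus statistics)."""
    result = subprocess.run(build_command(tag, prompt), capture_output=True,
                            text=True, timeout=timeout)
    # Combine both streams, since the statistics may not be written to stdout.
    return result.stdout + result.stderr
```

For example, `for tag in TAGS: print(run_variant(tag))` runs each variant in turn; the first run of a tag may pause while the model downloads.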

70B Model:

Designed for advanced reasoning, multilingual understanding, and long-context processing.

ollama run deepseek-r1:70b --verbose

Total duration: 1 minute and 28 seconds.

Tested on an M1 Max Mac Studio with 64 GB of unified memory.
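The `total duration` line in `--verbose` output is printed in Go's duration notation (hours `h`, minutes `m`, seconds `s`, and smaller units such as `ms`), which is awkward to compare directly across runs. A minimal sketch that converts such strings to seconds, assuming that notation; `duration_to_seconds` is a hypothetical helper name.

```python
import re

# Seconds per unit in Go-style duration strings such as "1m28.5s".
_UNITS = {"h": 3600.0, "m": 60.0, "s": 1.0,
          "ms": 1e-3, "us": 1e-6, "µs": 1e-6, "ns": 1e-9}
# Two-letter units are tried before "m" and "s" so "850ms" is not read as "m" + "s".
_PART = r"(\d+(?:\.\d+)?)(h|ms|us|µs|ns|m|s)"


def duration_to_seconds(text: str) -> float:
    """Convert a duration like '1m28.5s' or '850ms' into seconds."""
    text = text.strip()
    if not re.fullmatch(f"(?:{_PART})+", text):
        raise ValueError(f"not a duration: {text!r}")
    return sum(float(number) * _UNITS[unit] for number, unit in re.findall(_PART, text))
```

With this, a run reported as one minute and 28 seconds becomes 88 seconds, which can be compared or averaged across variants.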