Large Language Models (LLMs) generate responses in Markdown format for several key reasons, rooted in both their training and practical utility:

Training Data Influence:

LLMs are trained on vast datasets that include technical documentation, forums (e.g., GitHub, Stack Overflow), and web content where Markdown is prevalent. This exposure teaches them to recognize and replicate Markdown syntax for structuring information, such as lists, headers, or code blocks.

User Instructions:

Users often explicitly request formatted outputs (e.g., "Provide a bulleted list in Markdown"). LLMs adhere to these prompts, leveraging Markdown to organize responses with elements like # headers, - bullets, or triple backticks for code snippets.

Enhanced Readability and Structure:

Markdown helps LLMs present complex information clearly. For example:

Code snippets: Enclosed in backticks (`like this`) so that code is set apart from the surrounding prose and its characters are not read as formatting.

Bold/italics: Emphasize key terms (e.g., **example**).

Tables/Headers: Organize data systematically.

Platform Compatibility:

Many platforms (e.g., chatbots, note-taking apps) render Markdown, ensuring outputs display neatly. Even in plain text, Markdown remains legible (e.g., *italics* is intuitive).

Developer Design Choices:

Model creators might encourage Markdown for consistency, especially in technical contexts (e.g., generating API docs). This aligns with user expectations in developer communities.

Simplified Formatting:

Markdown is lightweight and easy to generate programmatically, making it ideal for LLMs to handle without complex rendering logic.
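To make the "easy to generate programmatically" point concrete, here is a minimal sketch that builds a Markdown table from plain objects using only string operations. The helper name `toMarkdownTable` and the sample data are assumptions made for illustration; they are not part of the original post.

```javascript
// Build a Markdown table from an array of flat objects.
// toMarkdownTable is a hypothetical helper used only for illustration.
function toMarkdownTable(rows) {
  if (!Array.isArray(rows) || rows.length === 0) return "";
  // Column names come from the keys of the first row.
  const headers = Object.keys(rows[0]);
  // Escape pipes so cell text cannot break the column layout.
  const cell = (value) => String(value ?? "").replace(/\|/g, "\\|");
  const lines = [
    "| " + headers.join(" | ") + " |",
    "| " + headers.map(() => "---").join(" | ") + " |",
    ...rows.map((row) => "| " + headers.map((h) => cell(row[h])).join(" | ") + " |"),
  ];
  return lines.join("\n");
}

const sample = toMarkdownTable([
  { Country: "France", Capital: "Paris" },
  { Country: "Japan", Capital: "Tokyo" },
]);
console.log(sample);
```

The result is still readable as plain text, and a Markdown converter with table support enabled can turn it into an HTML table.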

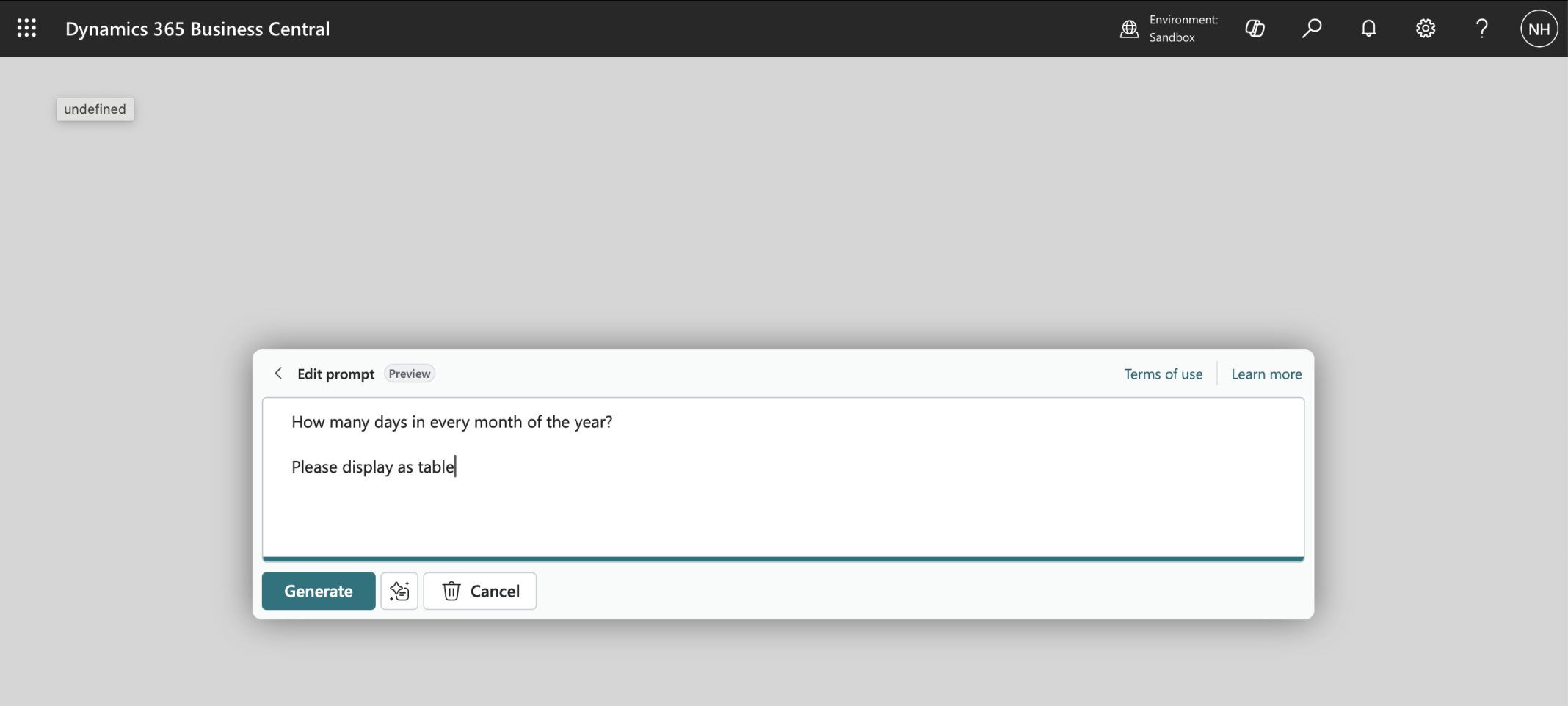

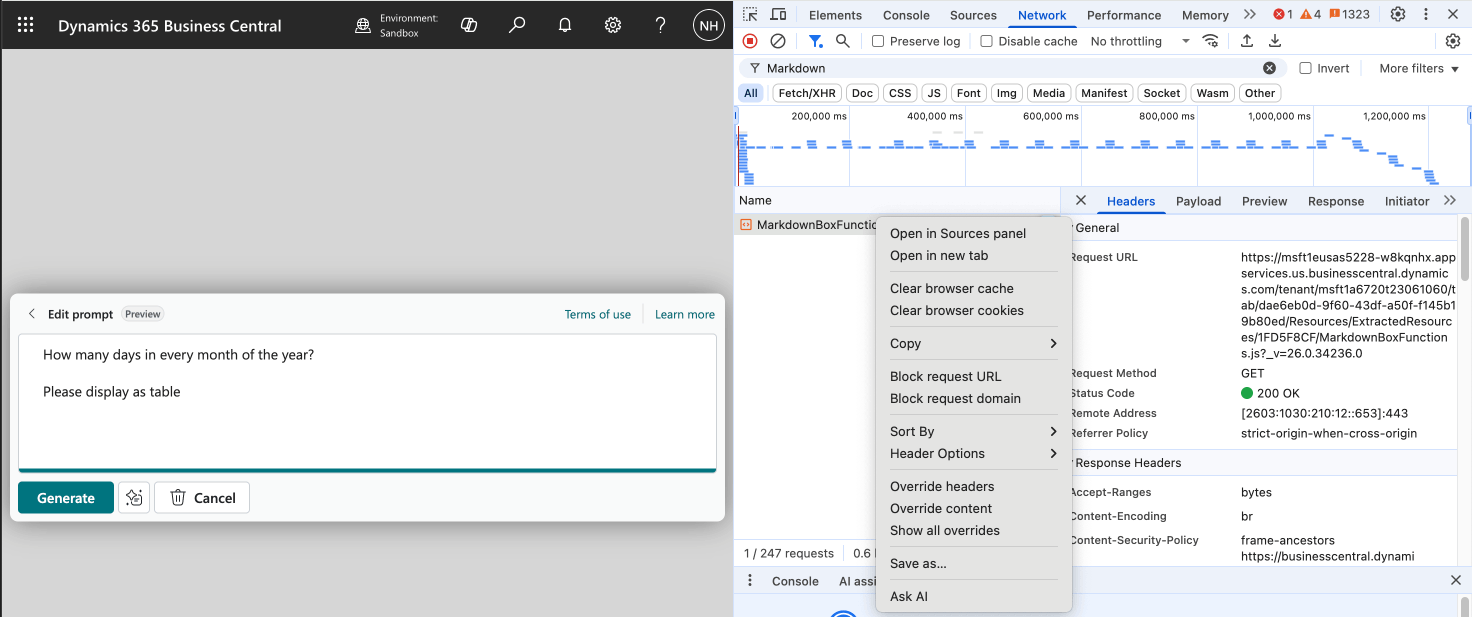

I provided a prompt that would return a Markdown-formatted response.

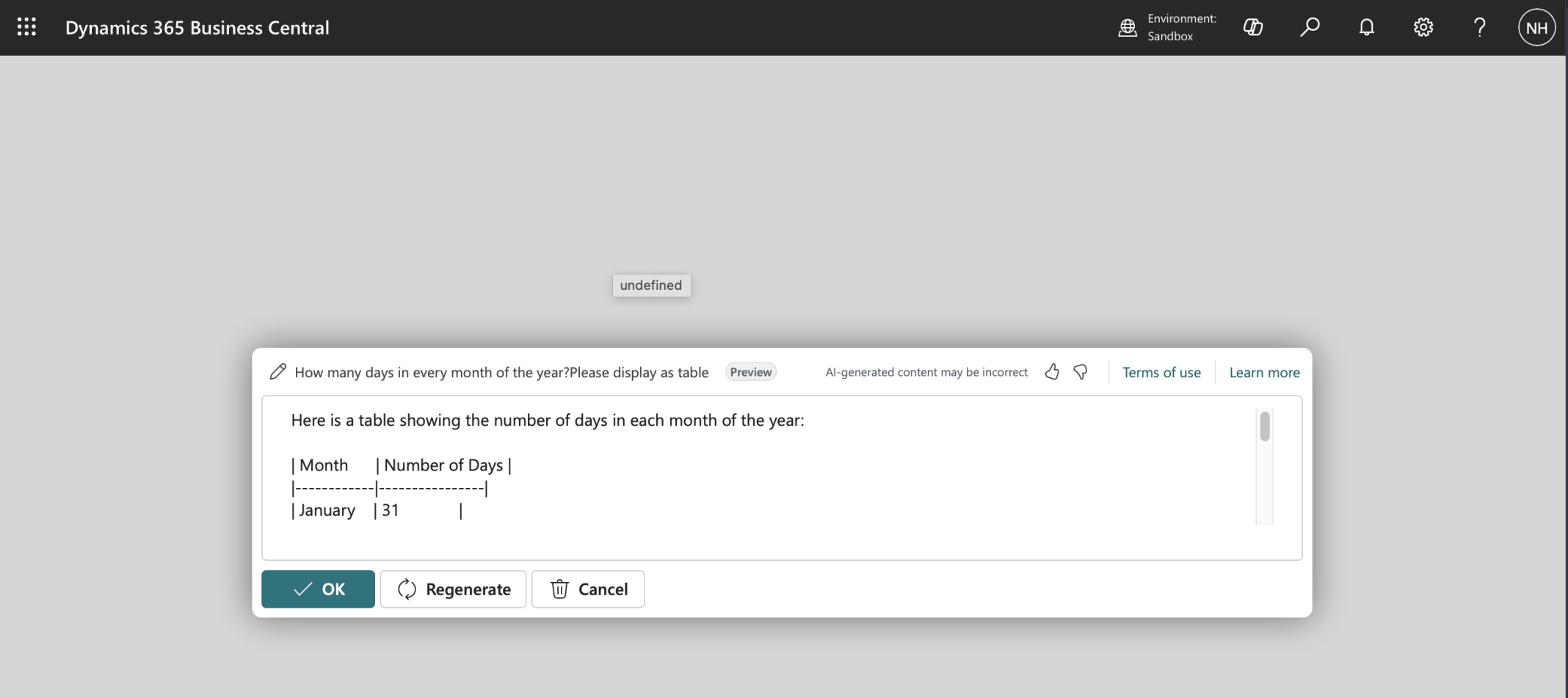

The Markdown-formatted result was hard to read when displayed as raw text.

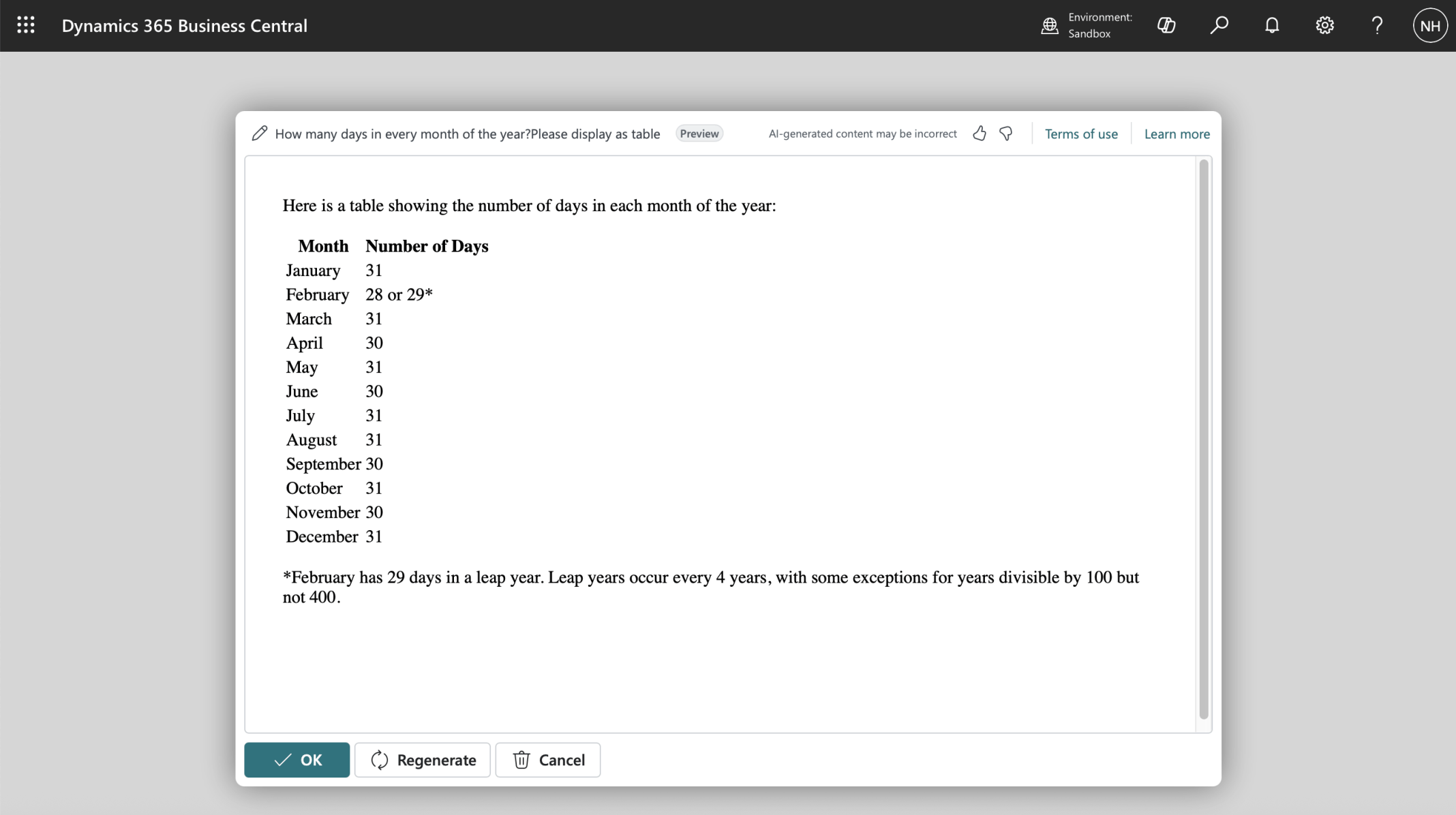

I added a controladdin to the PromptDialog page to render the Markdown as HTML.

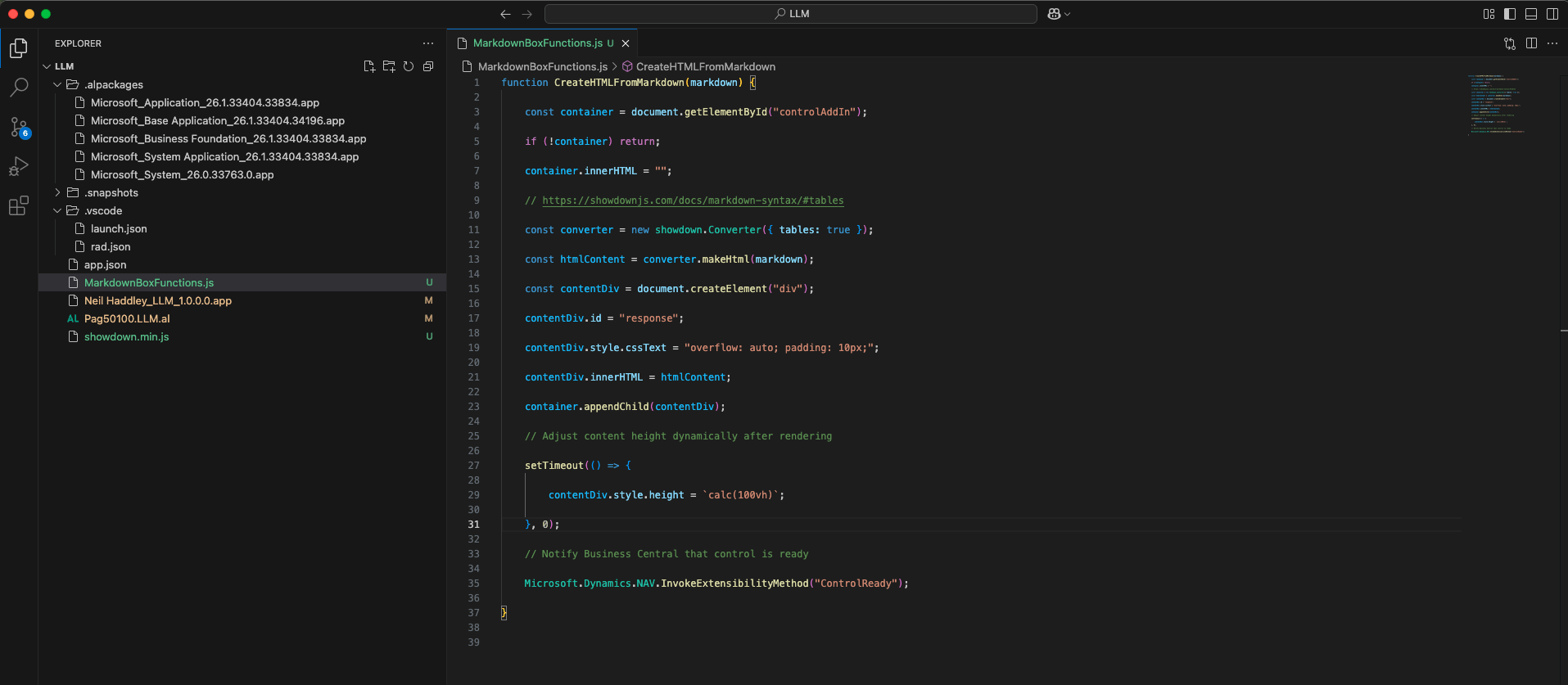

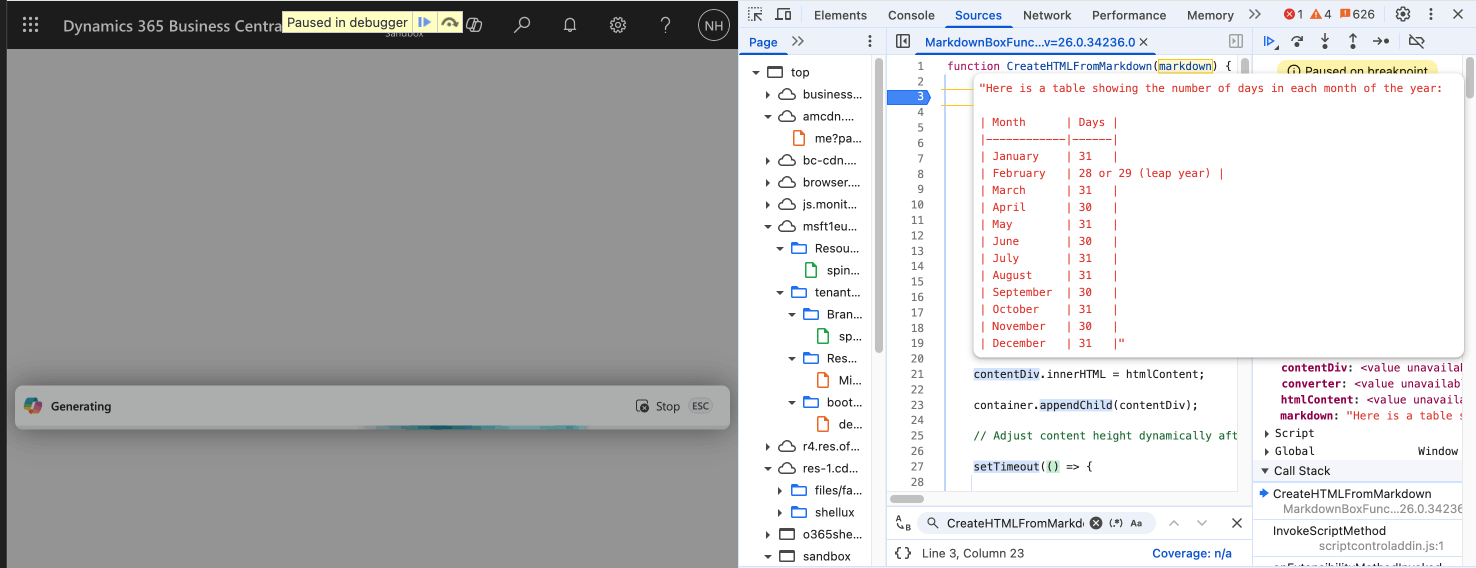

The CreateHTMLFromMarkdown JavaScript function is the key step: it converts the Markdown to HTML with Showdown and inserts the result into the control add-in.

Open in Sources panel

Debugging the JavaScript

MarkdownBoxFunctions.js

```javascript
function CreateHTMLFromMarkdown(markdown) {
    const container = document.getElementById("controlAddIn");
    if (!container) return;

    container.innerHTML = "";

    // https://showdownjs.com/docs/markdown-syntax/#tables
    const converter = new showdown.Converter({ tables: true });
    const htmlContent = converter.makeHtml(markdown);

    const contentDiv = document.createElement("div");
    contentDiv.id = "response";
    contentDiv.style.cssText = "overflow: auto; padding: 10px;";
    contentDiv.innerHTML = htmlContent;

    container.appendChild(contentDiv);

    // Adjust content height dynamically after rendering
    setTimeout(() => {
        contentDiv.style.height = `calc(100vh)`;
    }, 0);

    // Notify Business Central that control is ready
    Microsoft.Dynamics.NAV.InvokeExtensibilityMethod("ControlReady");
}
```
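The function above assumes that the `showdown` global has loaded before it is called. As a hedged sketch, the conversion step could be pulled into a small pure function that takes the converter as a parameter and falls back to escaped, preformatted text when no converter is available. The names `escapeHtml` and `renderMarkdown` are hypothetical and do not appear in the original code; the stub converter below only stands in for `showdown.Converter`.

```javascript
// Escape HTML-significant characters so raw text can be placed in markup safely.
// escapeHtml is a hypothetical helper, not part of the original code.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Convert Markdown to HTML with the supplied converter, or fall back to
// escaped preformatted text when no usable converter is available.
// renderMarkdown is a hypothetical helper, not part of the original code.
function renderMarkdown(markdown, converter) {
  const text = typeof markdown === "string" ? markdown : "";
  if (!converter || typeof converter.makeHtml !== "function") {
    return "<pre>" + escapeHtml(text) + "</pre>";
  }
  return converter.makeHtml(text);
}

// Usage with a stub converter standing in for showdown.Converter:
const stubConverter = { makeHtml: (md) => "<p>" + md + "</p>" };
console.log(renderMarkdown("Paris", stubConverter)); // prints "<p>Paris</p>"
console.log(renderMarkdown("<b>Paris</b>", null));   // prints "<pre>&lt;b&gt;Paris&lt;/b&gt;</pre>"
```

Inside the control add-in, one possible call would be `renderMarkdown(markdown, typeof showdown !== "undefined" ? new showdown.Converter({ tables: true }) : null)`, with the returned string assigned to `contentDiv.innerHTML`. Keeping the conversion free of DOM access also makes it testable outside the browser.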

Pag50100.LLM.al

```al
namespace LLM.LLM;

using System.AI;

controladdin MarkdownBox
{
    RequestedHeight = 500;
    MaximumHeight = 700;
    VerticalStretch = false;
    VerticalShrink = false;
    HorizontalStretch = true;
    HorizontalShrink = true;
    Scripts = 'showdown.min.js', 'MarkdownBoxFunctions.js';
    // ShowdownJS v 2.0 is released under the MIT license. Previous versions are released under BSD. https://github.com/showdownjs/showdown

    event ControlReady()
    procedure CreateHTMLFromMarkdown(markdown: Text);
}

enumextension 50100 LLM extends "Copilot Capability"
{
    value(50100; "LLM") { }
}

page 50100 LLM
{
    ApplicationArea = All;
    Caption = 'LLM';
    PageType = PromptDialog;
    Extensible = false;
    DataCaptionExpression = Prompt;
    IsPreview = true;

    layout
    {
        #region input section
        area(Prompt)
        {
            field(PromptField; Prompt)
            {
                ShowCaption = false;
                MultiLine = true;
                InstructionalText = 'Message Large Language Model';
            }
        }
        #endregion

        #region output section
        area(Content)
        {
            usercontrol(Formatted; MarkdownBox)
            {
                ApplicationArea = All;
            }

            /*field(OutputFiled; Output)
            {
                ShowCaption = false;
                MultiLine = true;
            }*/
        }
        #endregion
    }
    actions
    {
        #region prompt guide
        area(PromptGuide)
        {
            action("Capital City")
            {
                ApplicationArea = All;
                Caption = 'Capital City';
                ToolTip = 'What is the Capital of ...';

                trigger OnAction()
                begin
                    Prompt := 'What is the Capital of <Country>?';
                end;
            }
        }
        #endregion

        #region system actions
        area(SystemActions)
        {
            systemaction(Generate)
            {
                Caption = 'Generate';
                ToolTip = 'Generate a response.';

                trigger OnAction()
                begin
                    RunGeneration();
                    CurrPage.Formatted.CreateHTMLFromMarkdown(Output);
                end;
            }
            systemaction(OK)
            {
                Caption = 'OK';
                ToolTip = 'Save the result.';
            }
            systemaction(Cancel)
            {
                Caption = 'Cancel';
                ToolTip = 'Discard the result.';
            }
            systemaction(Regenerate)
            {
                Caption = 'Regenerate';
                ToolTip = 'Regenerate a response.';

                trigger OnAction()
                begin
                    RunGeneration();
                    CurrPage.Formatted.CreateHTMLFromMarkdown(Output);
                end;
            }
            #endregion
        }
    }
    var
        Prompt: Text;
        Output: Text;

    local procedure RunGeneration()
    var
        CopilotCapability: Codeunit "Copilot Capability";
        AzureOpenAI: Codeunit "Azure OpenAI";
        AOAIDeployments: Codeunit "AOAI Deployments";
        AOAIChatCompletionParams: Codeunit "AOAI Chat Completion Params";
        AOAIChatMessages: Codeunit "AOAI Chat Messages";
        AOAIOperationResponse: Codeunit "AOAI Operation Response";
        UserMessage: TextBuilder;
    begin
        // CopilotCapability.UnregisterCapability(Enum::"Copilot Capability"::LLM);

        if not CopilotCapability.IsCapabilityRegistered(Enum::"Copilot Capability"::LLM) then
            CopilotCapability.RegisterCapability(Enum::"Copilot Capability"::LLM, 'https://about:none');

        AzureOpenAI.SetAuthorization(Enum::"AOAI Model Type"::"Chat Completions", GetEndpoint(), GetDeployment(), GetApiKey());
        AzureOpenAI.SetCopilotCapability(Enum::"Copilot Capability"::LLM);

        AOAIChatCompletionParams.SetMaxTokens(2500);
        AOAIChatCompletionParams.SetTemperature(0);
        AOAIChatMessages.AddUserMessage(Prompt);

        AzureOpenAI.GenerateChatCompletion(AOAIChatMessages, AOAIChatCompletionParams, AOAIOperationResponse);
        if (AOAIOperationResponse.IsSuccess()) then
            Output := AOAIChatMessages.GetLastMessage()
        else
            Output := ('Error: ' + AOAIOperationResponse.GetError());
    end;

    local procedure GetEndpoint(): Text
    var
    begin
        exit('https://haddley-ai.openai.azure.com');
    end;

    local procedure GetDeployment(): Text
    var
    begin
        exit('gpt-4o');
    end;

    [NonDebuggable]
    local procedure GetApiKey(): Text //SecretText
    var
    begin
        exit('1234567890123456789012');
    end;
}
```