I used Prompt flow, Semantic Kernel and Planner to create an AI application.

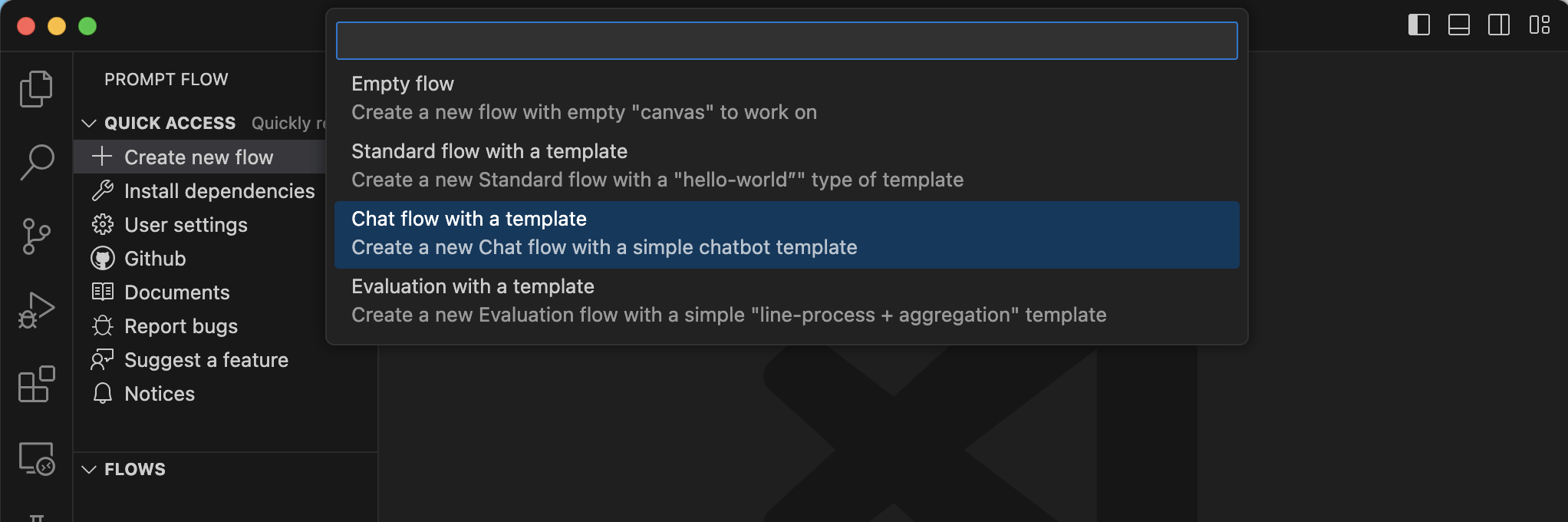

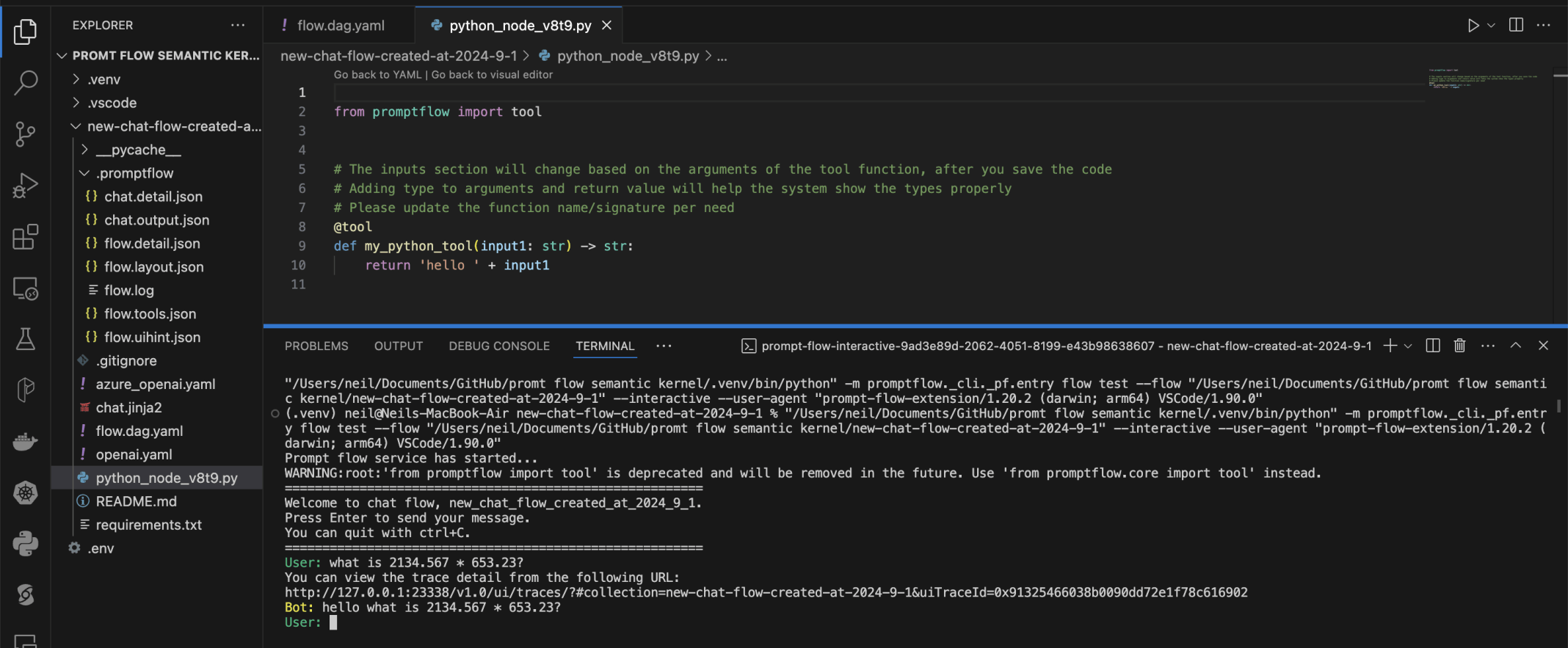

I used the Visual Studio Code Prompt flow extension to create a Chat flow.

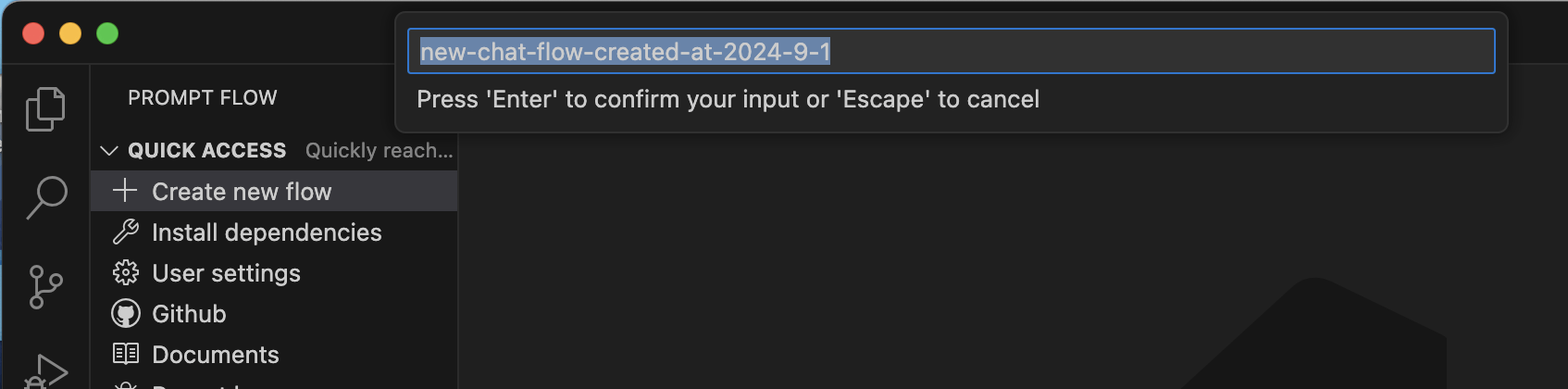

I accepted the default name.

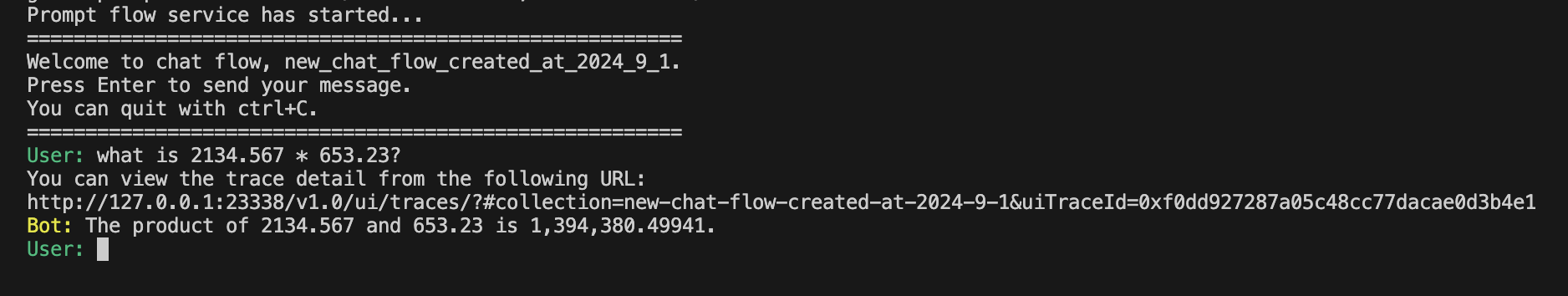

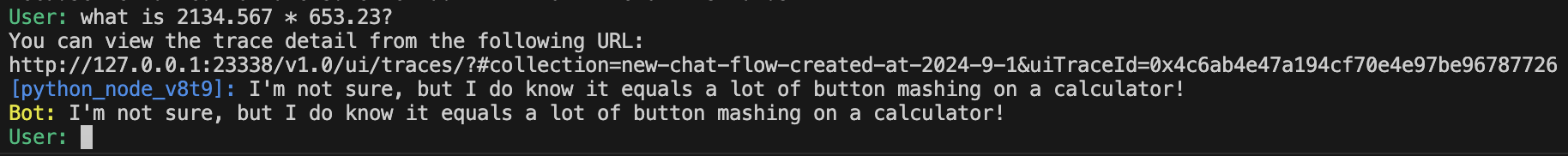

I ran the flow and asked "what is 2134.567 * 653.23?" It provided an answer that was close but incorrect (the correct result is 1,394,363.20141).

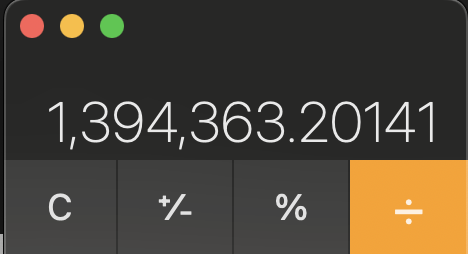

The correct result
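The exact product can be checked without floating-point rounding by using Python's `decimal` module. This is only a small verification sketch; the helper name `exact_product` is my own and is not part of the flow.

```python
from decimal import Decimal


def exact_product(a: str, b: str) -> Decimal:
    """Multiply two decimal strings exactly (no binary floating-point rounding)."""
    return Decimal(a) * Decimal(b)


result = exact_product("2134.567", "653.23")
print(f"{result:,}")  # 1,394,363.20141
```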

I replaced the large language model chat node with a Python node. Now the answer to every question I ask is "hello " + the question I asked (not very useful).

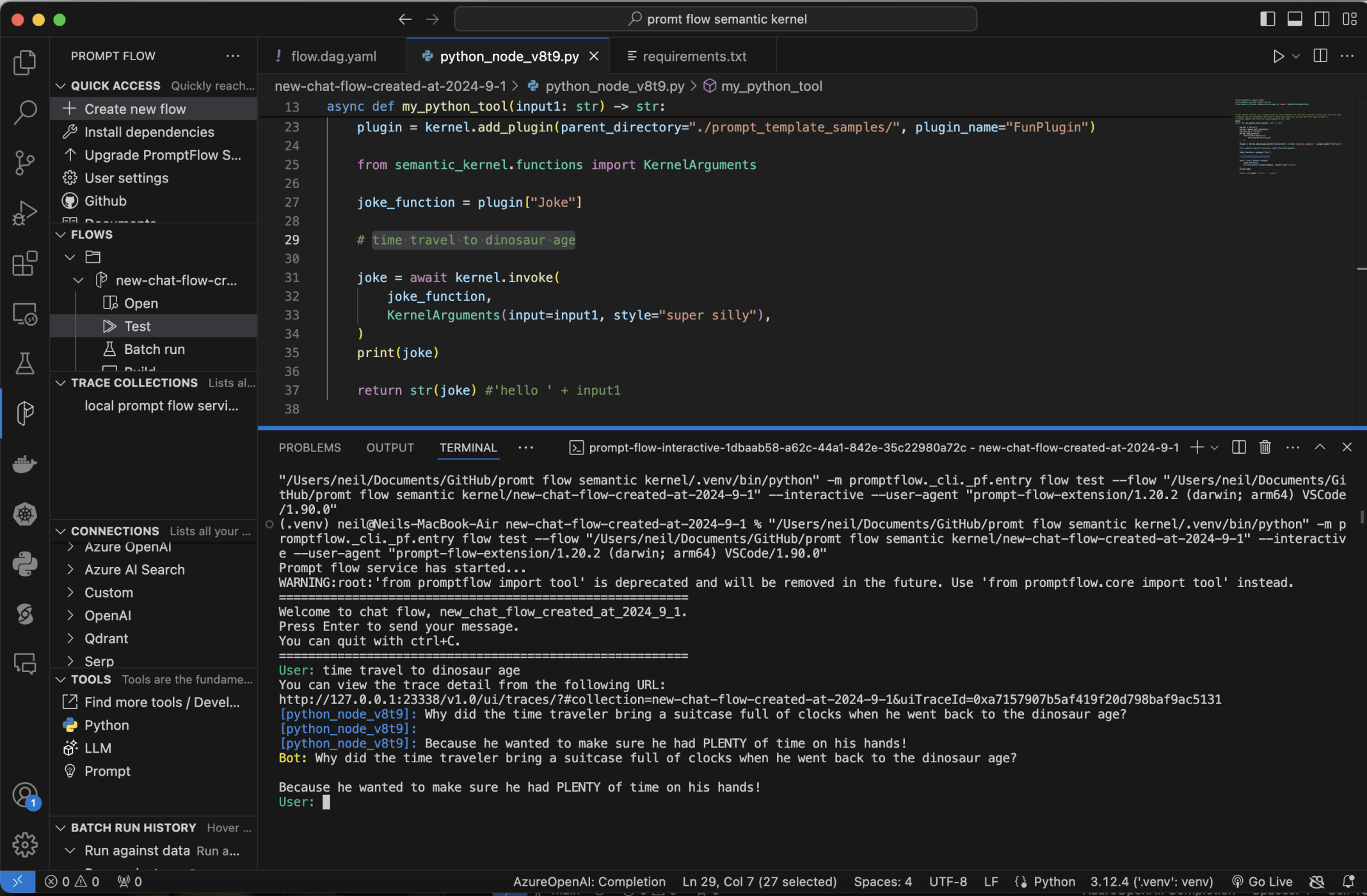
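For reference, a Python node with this behaviour can be as small as the sketch below. It assumes the same function name and `input1` parameter as the later listings; the `try`/`except` fallback is my own addition so the snippet also runs where `promptflow` is not installed.

```python
try:
    from promptflow.core import tool
except ImportError:
    # Assumption: outside a Prompt flow environment, use a no-op decorator
    # so the function can still be called directly.
    def tool(func):
        return func


@tool
def my_python_tool(input1: str) -> str:
    # Echo the question back with a "hello " prefix.
    return "hello " + input1


print(my_python_tool("what is 2134.567 * 653.23?"))
```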

I added Semantic Kernel to the Python code, then added the Joke function from the FunPlugin.

Now the answer to every question I ask is a joke (not always useful).

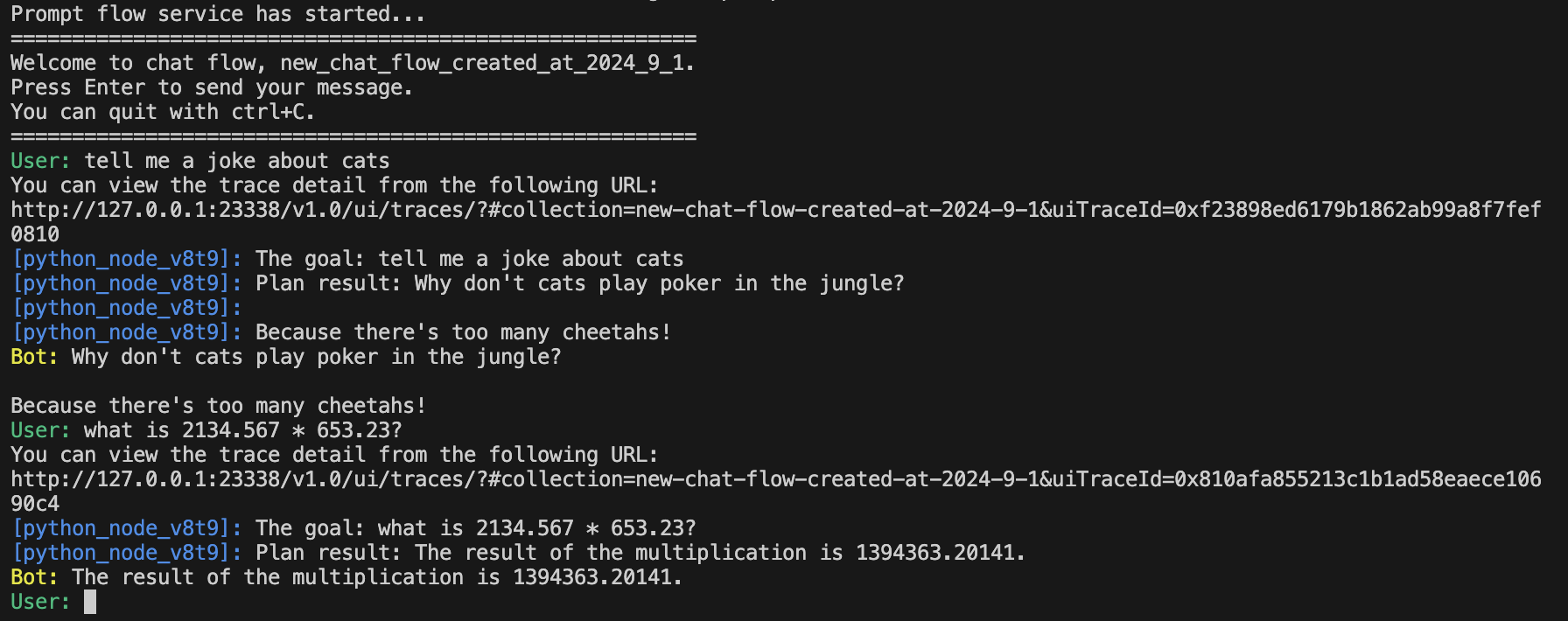

I added the MathPlugin and a Planner. Now I can ask fun questions or math questions and the bot can decide which Plugin to call.

python_node_v8t9.py

```python
from promptflow import tool
from semantic_kernel import Kernel
from semantic_kernel.connectors.ai.open_ai import OpenAIChatCompletion


@tool
async def my_python_tool(input1: str) -> str:

    kernel = Kernel()
    kernel.remove_all_services()
    service_id = "default"
    kernel.add_service(
        OpenAIChatCompletion(
            service_id=service_id,
        ),
    )
    plugin = kernel.add_plugin(parent_directory="./prompt_template_samples/", plugin_name="FunPlugin")

    from semantic_kernel.functions import KernelArguments

    joke_function = plugin["Joke"]

    # time travel to dinosaur age

    joke = await kernel.invoke(
        joke_function,
        KernelArguments(input=input1, style="super silly"),
    )
    print(joke)

    return str(joke)
```

python_node_v8t9.py updated

```python
# from promptflow import tool
from promptflow.core import tool
from semantic_kernel import Kernel
from semantic_kernel.connectors.ai.open_ai import OpenAIChatCompletion


@tool
async def my_python_tool(input1: str) -> str:

    kernel = Kernel()
    kernel.remove_all_services()
    service_id = "default"
    kernel.add_service(
        OpenAIChatCompletion(
            service_id=service_id,
        ),
    )

    fun_plugin = kernel.add_plugin(parent_directory="./prompt_template_samples/", plugin_name="FunPlugin")
    math_plugin = kernel.add_plugin(parent_directory="./plugins", plugin_name="MathPlugin")

    from semantic_kernel.planners.function_calling_stepwise_planner import (
        FunctionCallingStepwisePlanner,
        FunctionCallingStepwisePlannerOptions,
    )

    planner = FunctionCallingStepwisePlanner(service_id="default")

    # goal = "Figure out how much I have if first, my investment of 2130.23 dollars increased by 23%, and then I spend $5 on a coffee"  # noqa: E501
    # goal = "what is 2134.567 * 653.23?"
    # goal = "tell me a limerick about cats"
    goal = input1

    # Execute the plan
    result = await planner.invoke(kernel=kernel, question=goal)

    print(f"The goal: {goal}")
    print(f"Plan result: {result.final_answer}")

    return result.final_answer
```
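The contents of the MathPlugin loaded from `./plugins` are not shown here. As a rough illustration of what the planner has to choose from, a native Semantic Kernel plugin is a class whose methods are marked with `kernel_function`, with a description that tells the model what each function does. The class name `SketchMathPlugin` and its single `multiply` method below are hypothetical and are not the plugin used in this flow; the fallback decorator is my own addition so the snippet runs without `semantic_kernel` installed.

```python
from typing import Annotated

try:
    from semantic_kernel.functions import kernel_function
except ImportError:
    # Assumption: without semantic_kernel installed, use a stand-in decorator
    # that accepts the same keyword arguments and leaves the method unchanged.
    def kernel_function(name=None, description=None):
        def decorator(func):
            return func
        return decorator


class SketchMathPlugin:
    """Hypothetical plugin; not the MathPlugin from ./plugins."""

    @kernel_function(name="Multiply", description="Multiply two numbers.")
    def multiply(
        self,
        a: Annotated[float, "The first factor"],
        b: Annotated[float, "The second factor"],
    ) -> Annotated[float, "The product of a and b"]:
        return a * b


# Direct call, bypassing the kernel and planner entirely.
print(SketchMathPlugin().multiply(2134.567, 653.23))
```

With a function like this registered on the kernel, the arithmetic is done by ordinary Python code rather than by the language model, which is why a math question can come back correct.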