Prompt flow is a development tool designed to streamline the development of AI applications powered by Large Language Models (LLMs).

Prompt flow applications can be developed using Azure or locally on a laptop.

Prompt flow applications can be deployed using Azure App Service, Docker, or Kubernetes.

Here I demonstrate how I used Prompt flow for Visual Studio Code to create and run a couple of sample AI applications.

The result is not very different from AI applications I have developed in the past using LangChain.

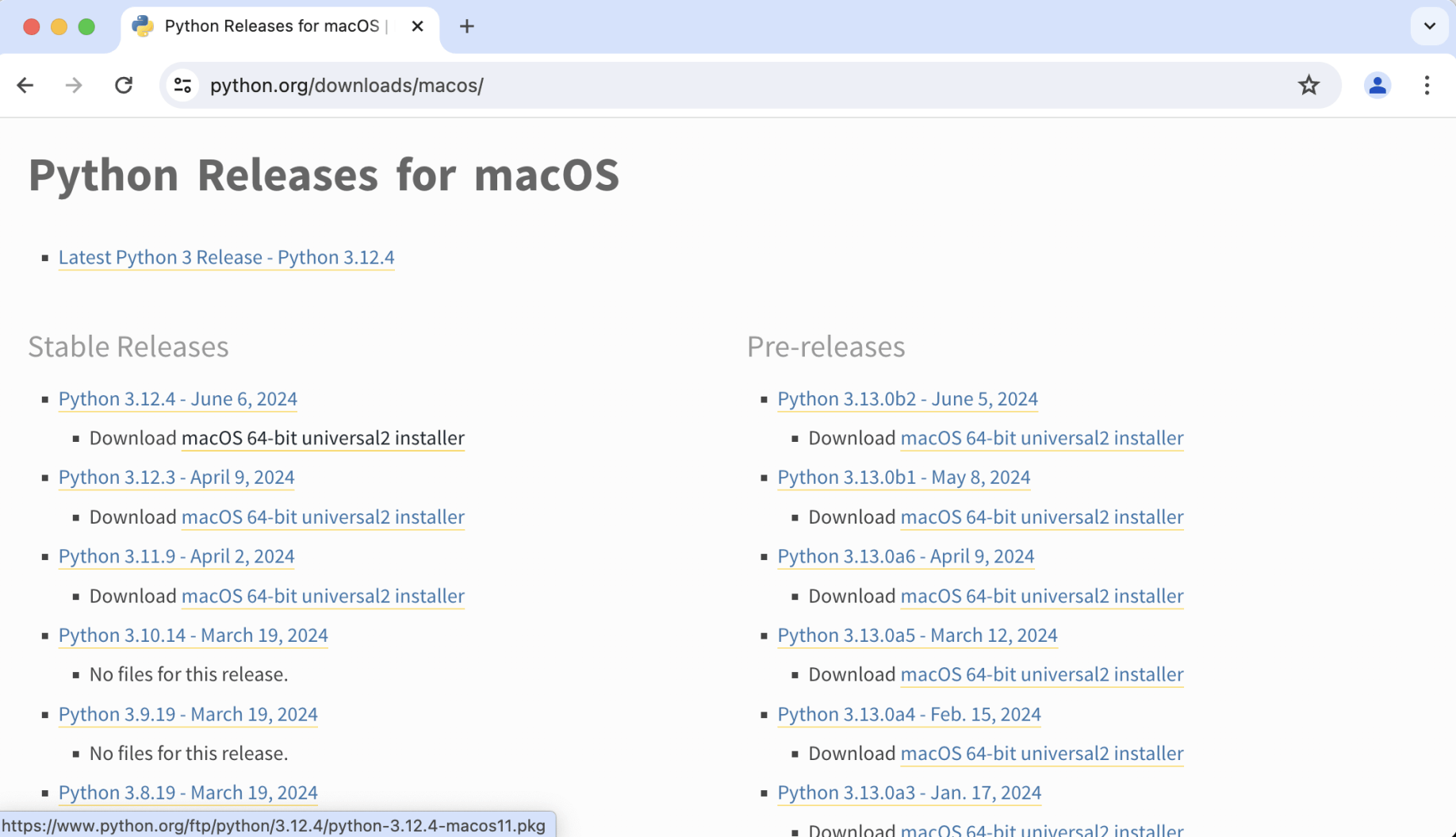

I downloaded Python from the python.org website

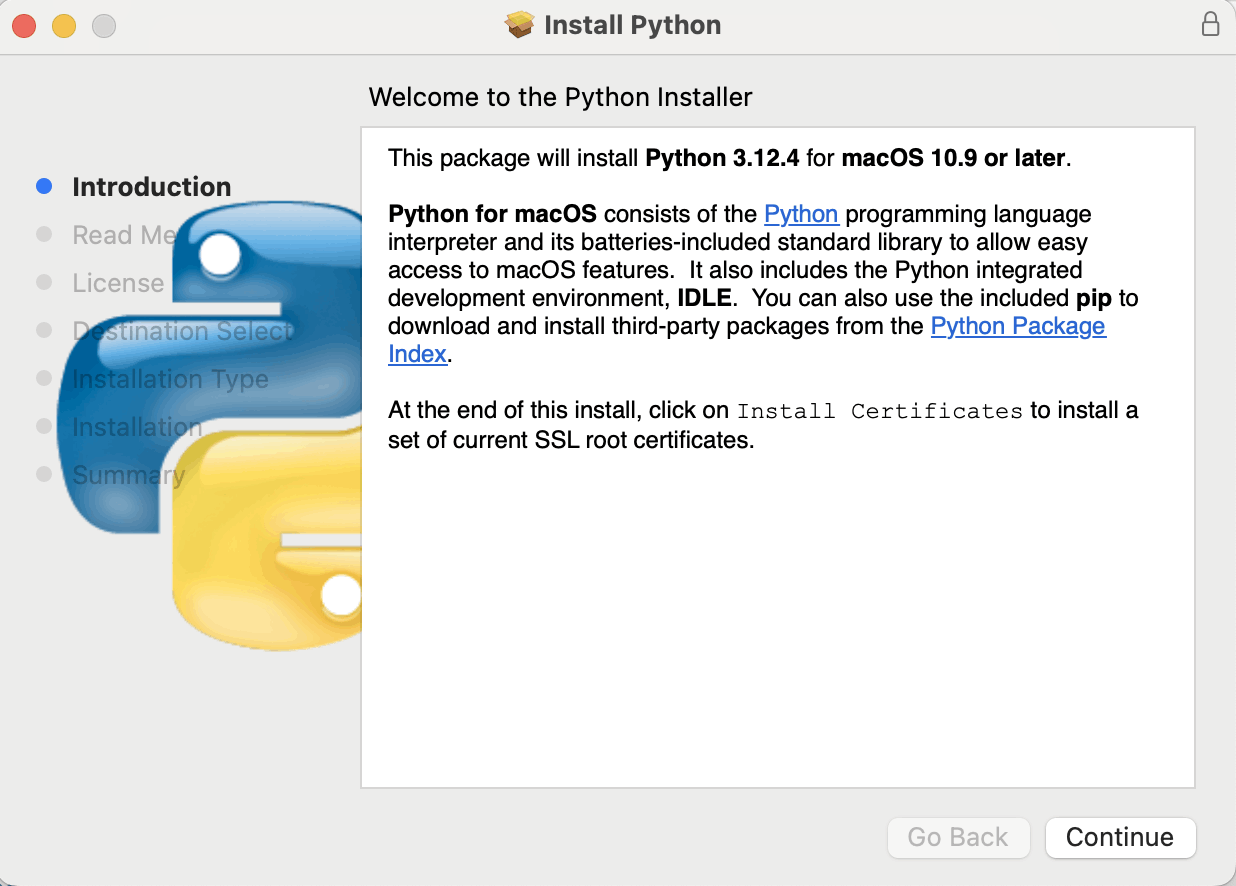

I installed Python 3.12.4 for macOS

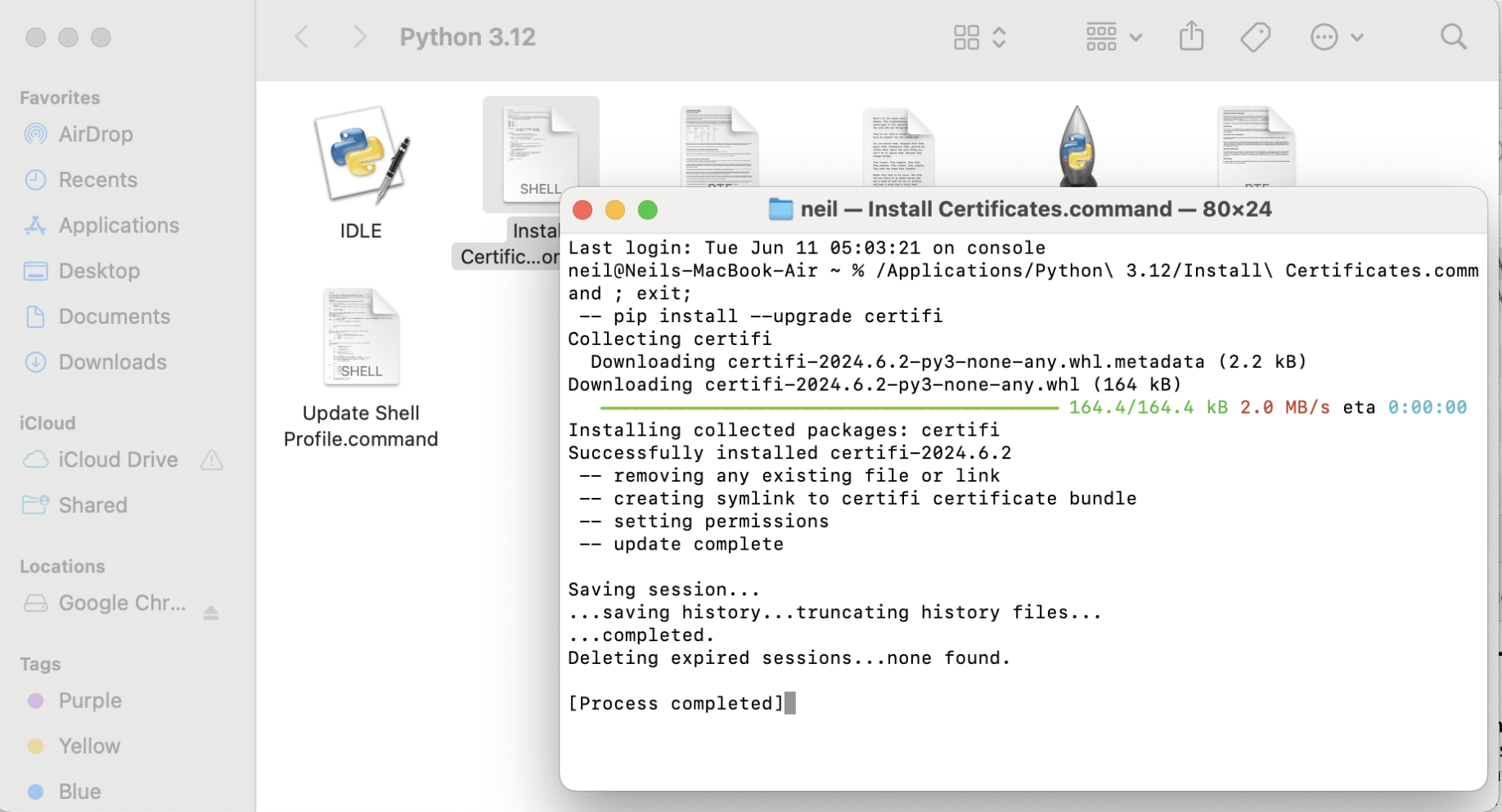

I ran the Install Certificates script

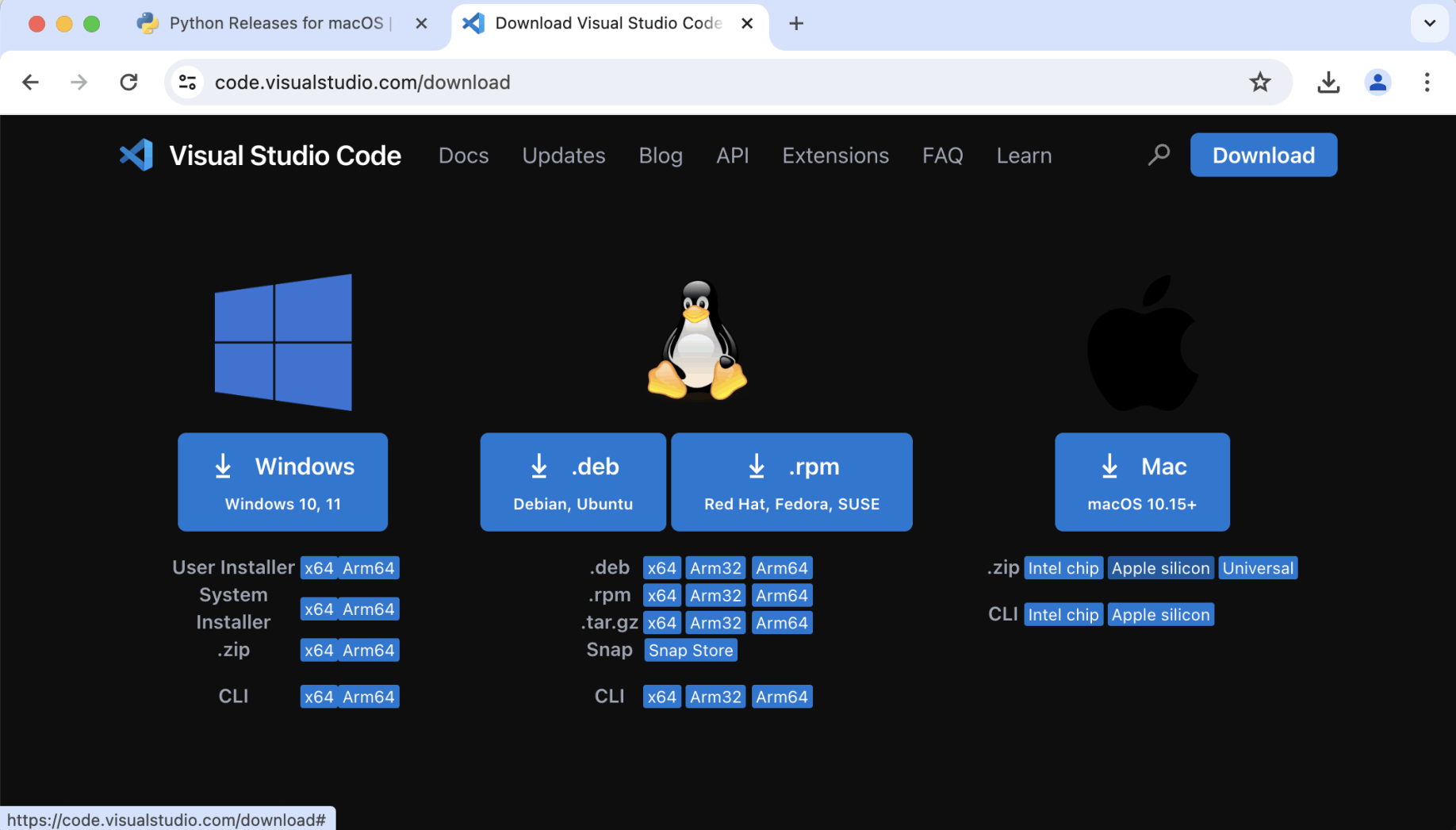

I downloaded Visual Studio Code (the Apple silicon build)

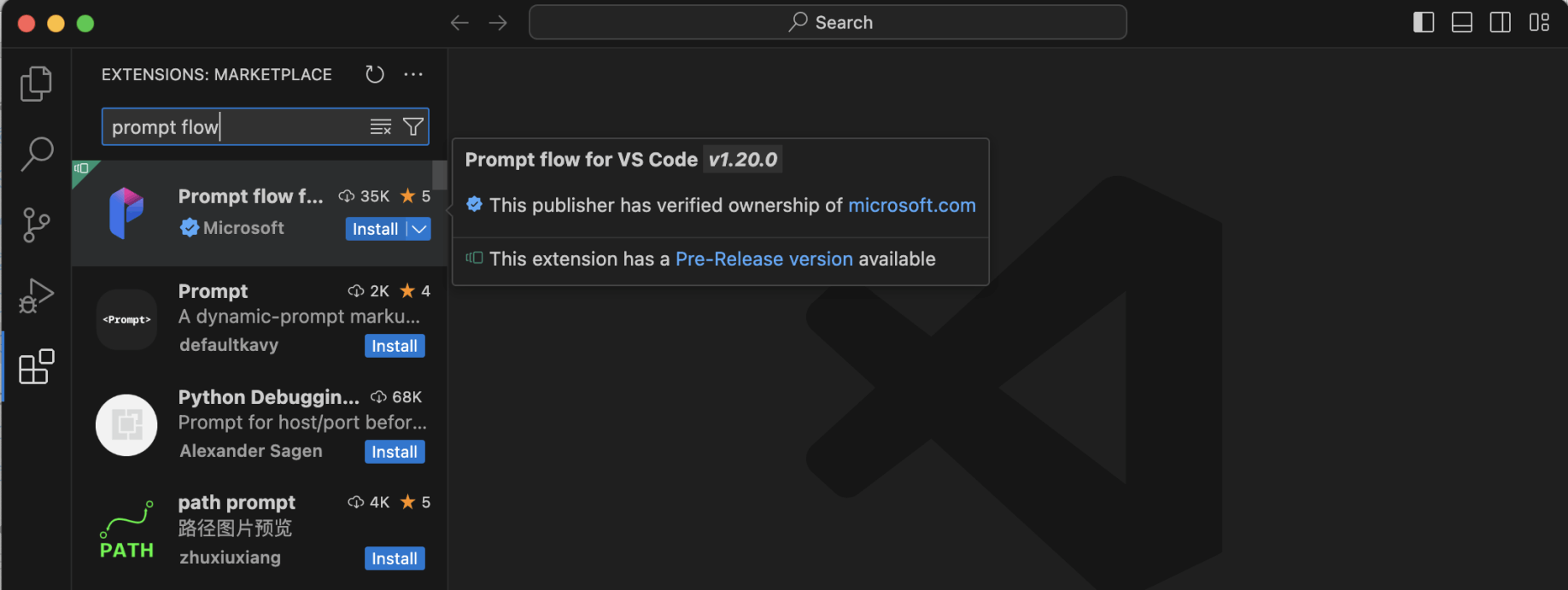

I installed the Prompt flow VS Code extension

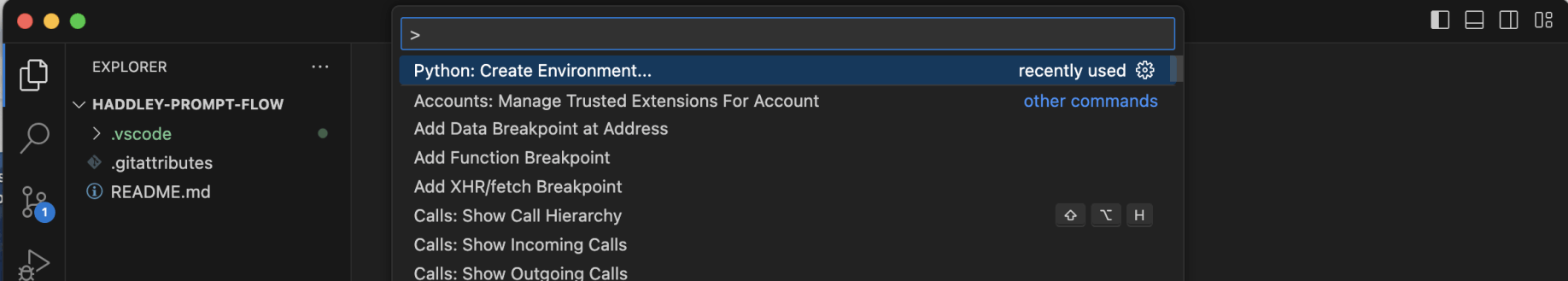

I opened a folder and ran the Python: Create Environment command.

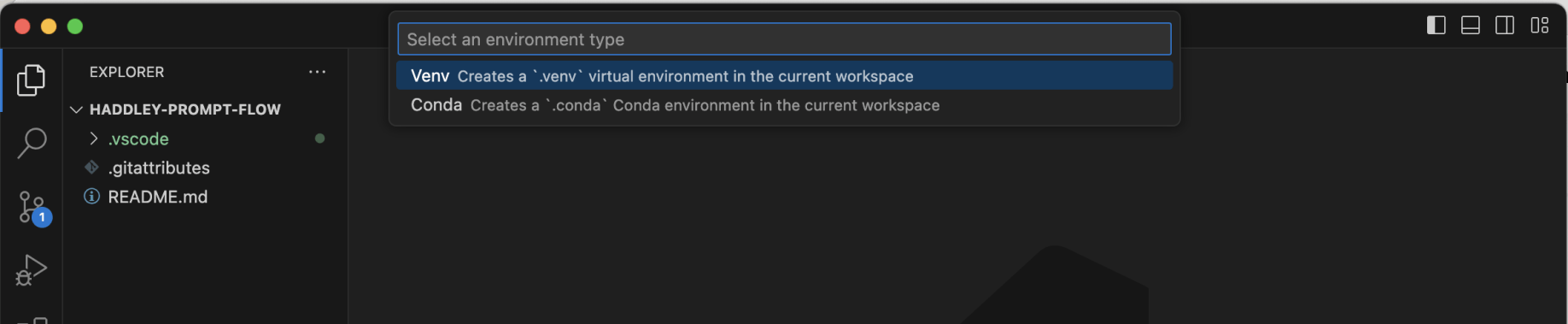

I created a .venv environment in the project's folder

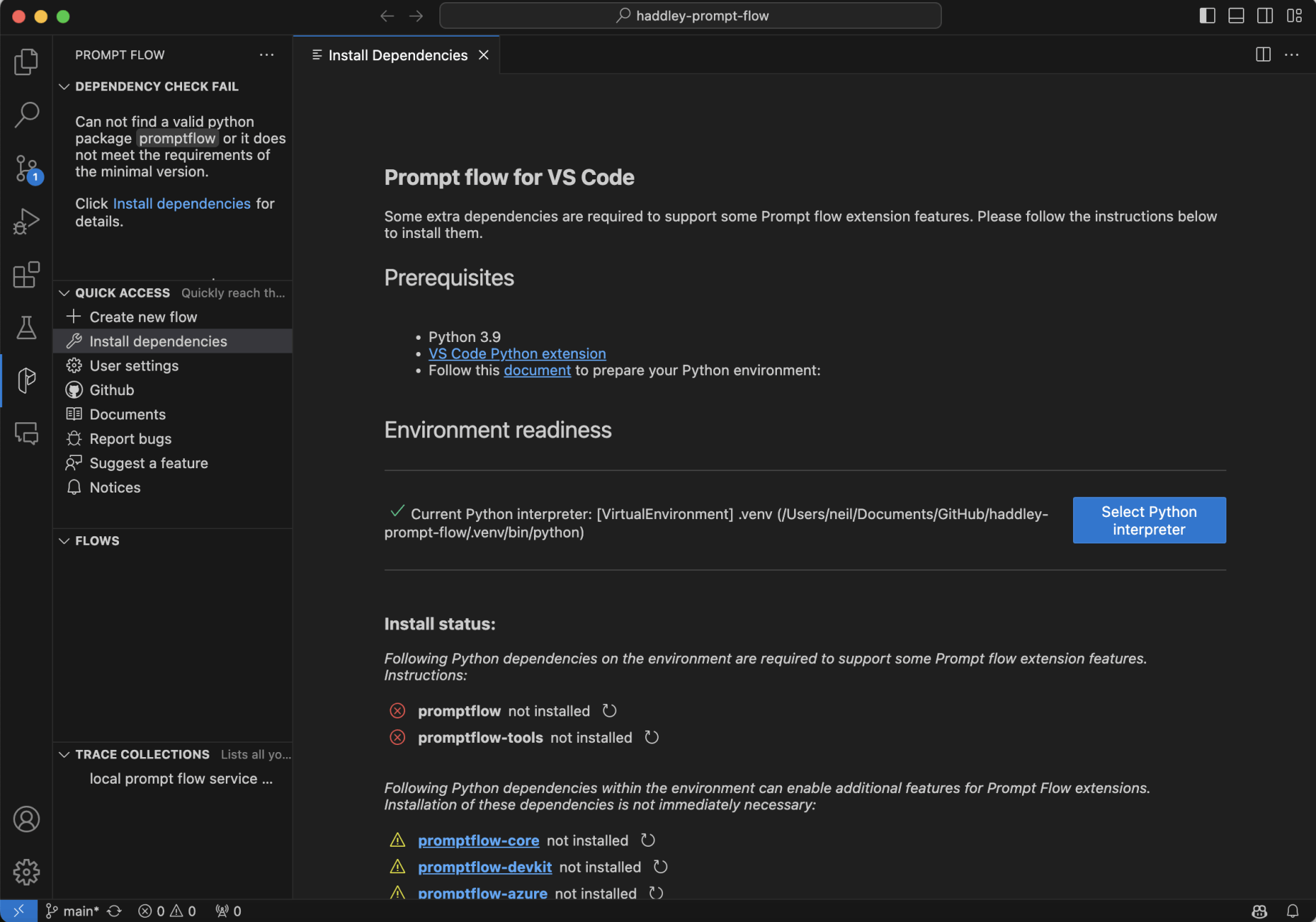

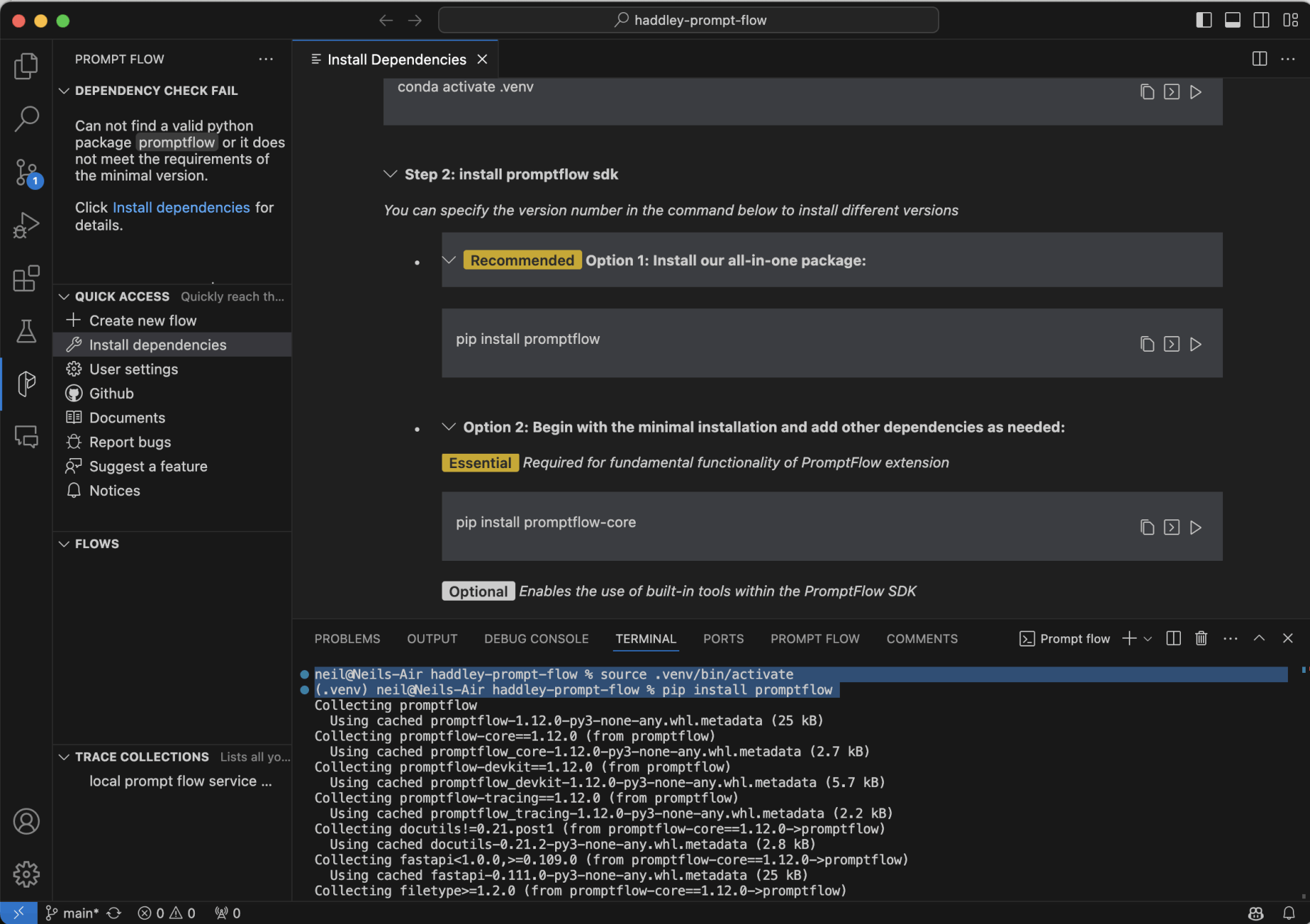
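The same kind of environment can also be created from Python itself. This is a minimal sketch using the standard library `venv` module, not what the VS Code command runs internally; the helper names `create_project_venv` and `is_venv_active` are hypothetical and chosen only for illustration.

```python
import sys
import venv
from pathlib import Path


def create_project_venv(project_dir: str, with_pip: bool = True) -> Path:
    """Create a .venv folder inside project_dir and return its path."""
    env_dir = Path(project_dir) / ".venv"
    # venv.create builds the environment; with_pip=True also bootstraps pip.
    venv.create(env_dir, with_pip=with_pip)
    return env_dir


def is_venv_active() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    return sys.prefix != sys.base_prefix
```

Calling `create_project_venv(".")` from the project folder should produce a `.venv` directory comparable to the one shown above, and `is_venv_active()` can be used afterwards to confirm that the interpreter in use comes from a virtual environment.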

I selected the Prompt flow extension (the "P" logo) and clicked the QUICK ACCESS | Install dependencies menu item

I used `source .venv/bin/activate` to activate the environment

I used `pip install promptflow` to add the promptflow dependencies

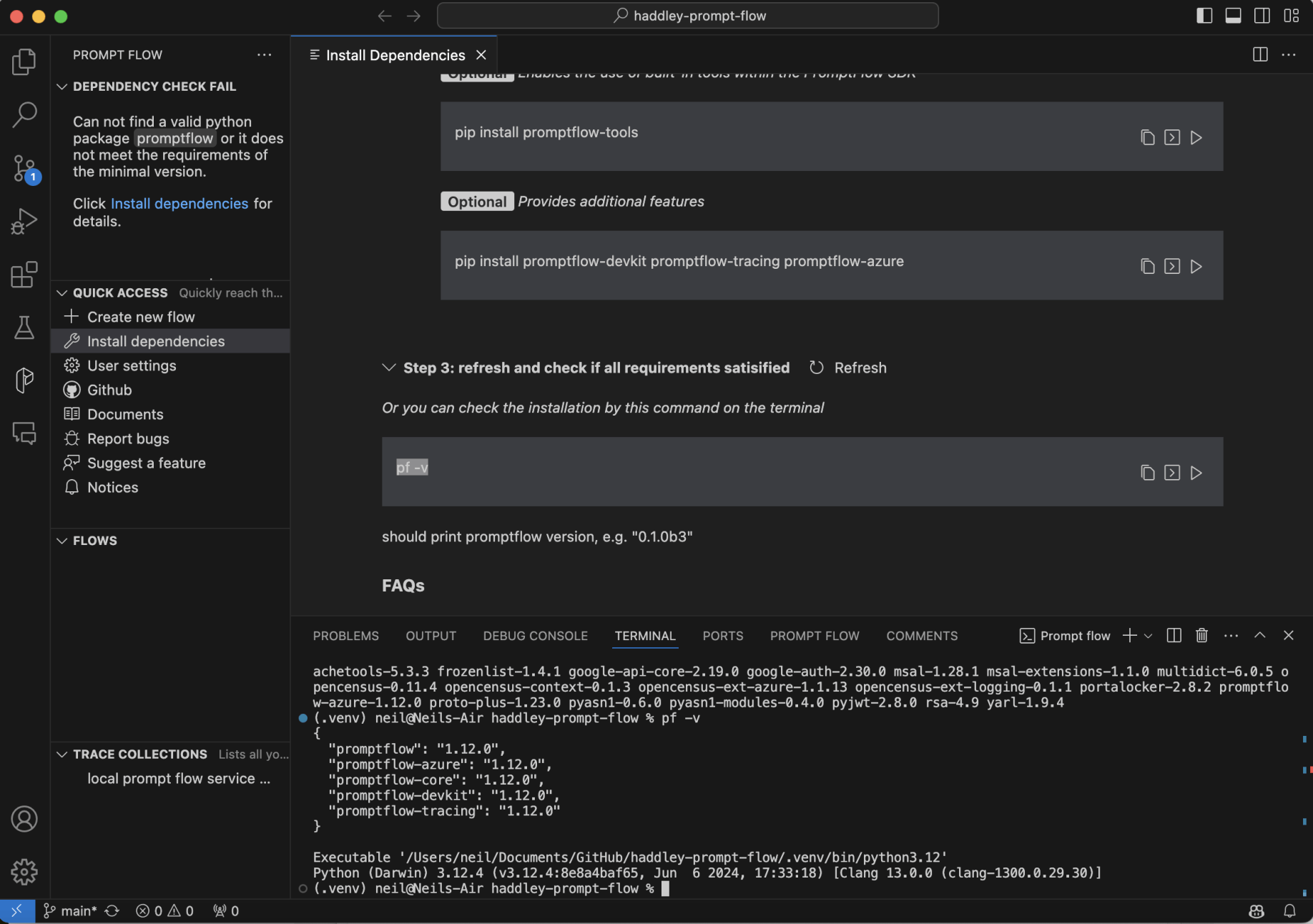

I ran `pf -v` to see what version of Prompt flow was installed

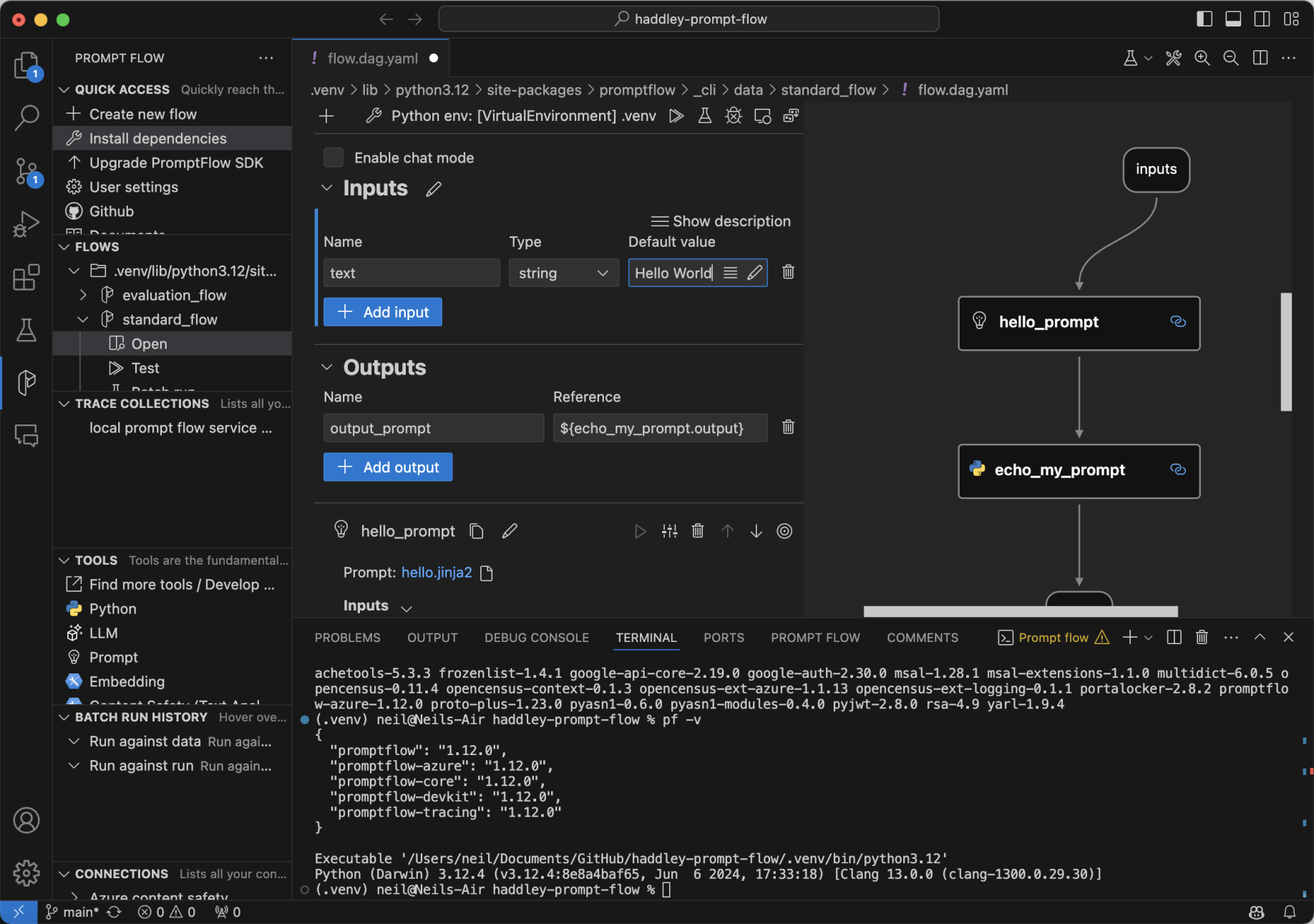
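A roughly similar check can be made from Python, which is handy inside a script or notebook. This sketch relies only on the standard library `importlib.metadata`; the helper name `installed_version` is hypothetical, and it reports only the package version rather than reproducing the full output of the `pf` command.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Prints the promptflow version if it is installed in the active environment,
# otherwise prints None.
print(installed_version("promptflow"))
```

If this prints `None`, the most likely cause is that the `.venv` environment is not the one currently selected.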

I clicked on FLOWS and navigated to the included standard_flow

I ensured that the .venv virtual environment was selected and entered "Hello World" as the default value for the text input

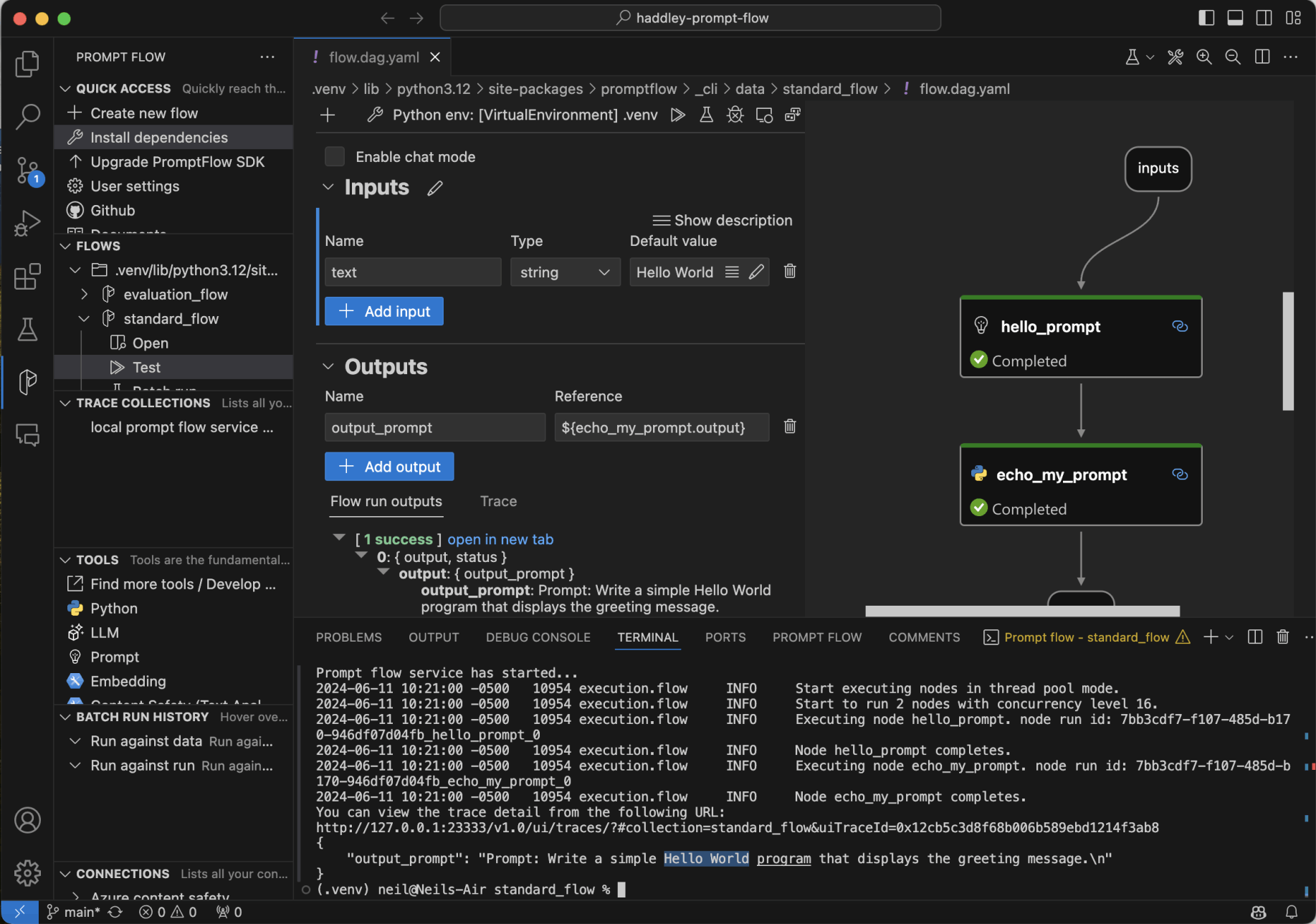

I clicked the Test menu item to run the standard_flow

The default text value was substituted into the "Write a simple {{text}} program that displays the greeting message." template, and the result was prefixed with "Prompt: "

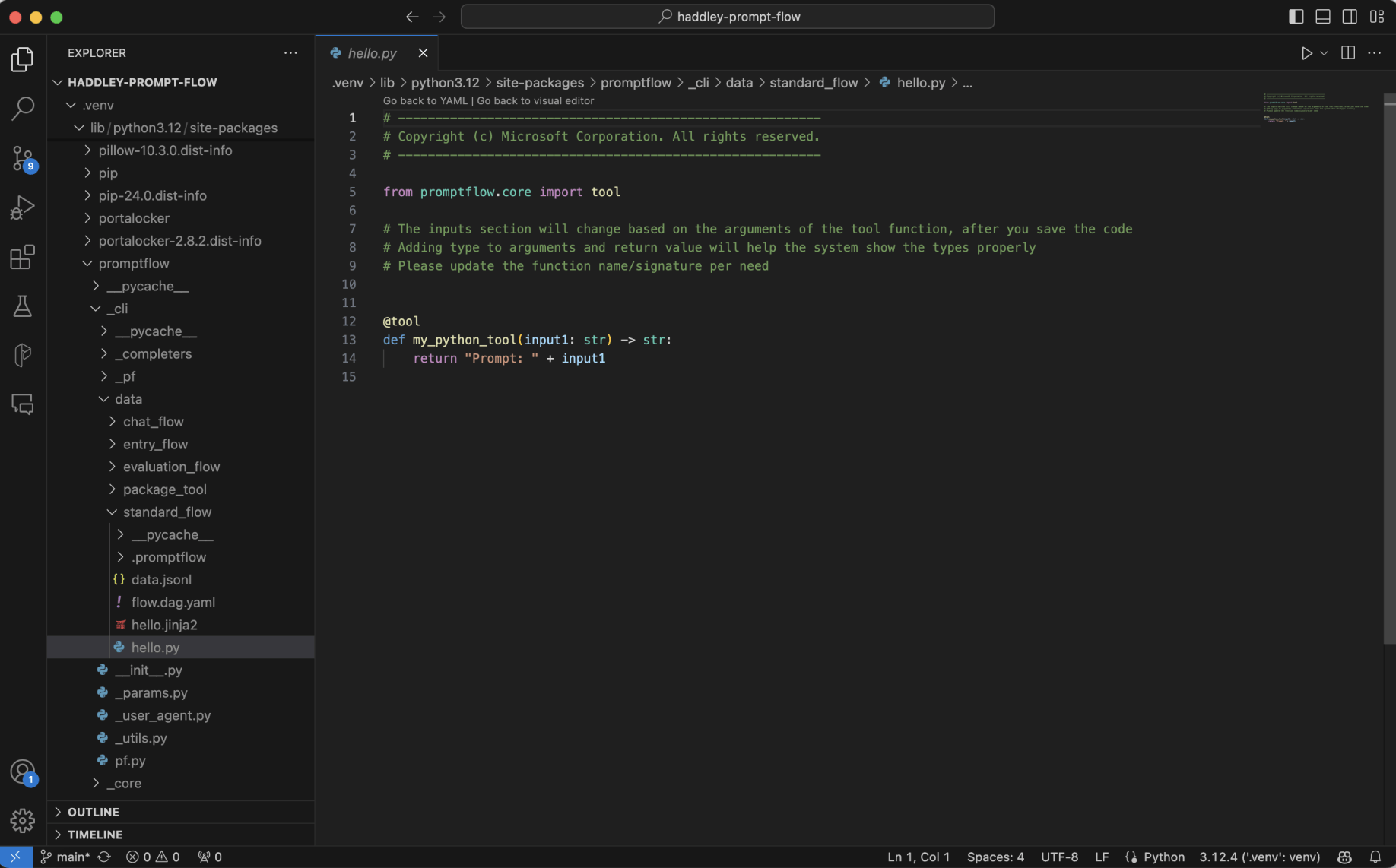

The "Prompt: " text is included in the hello.py source code

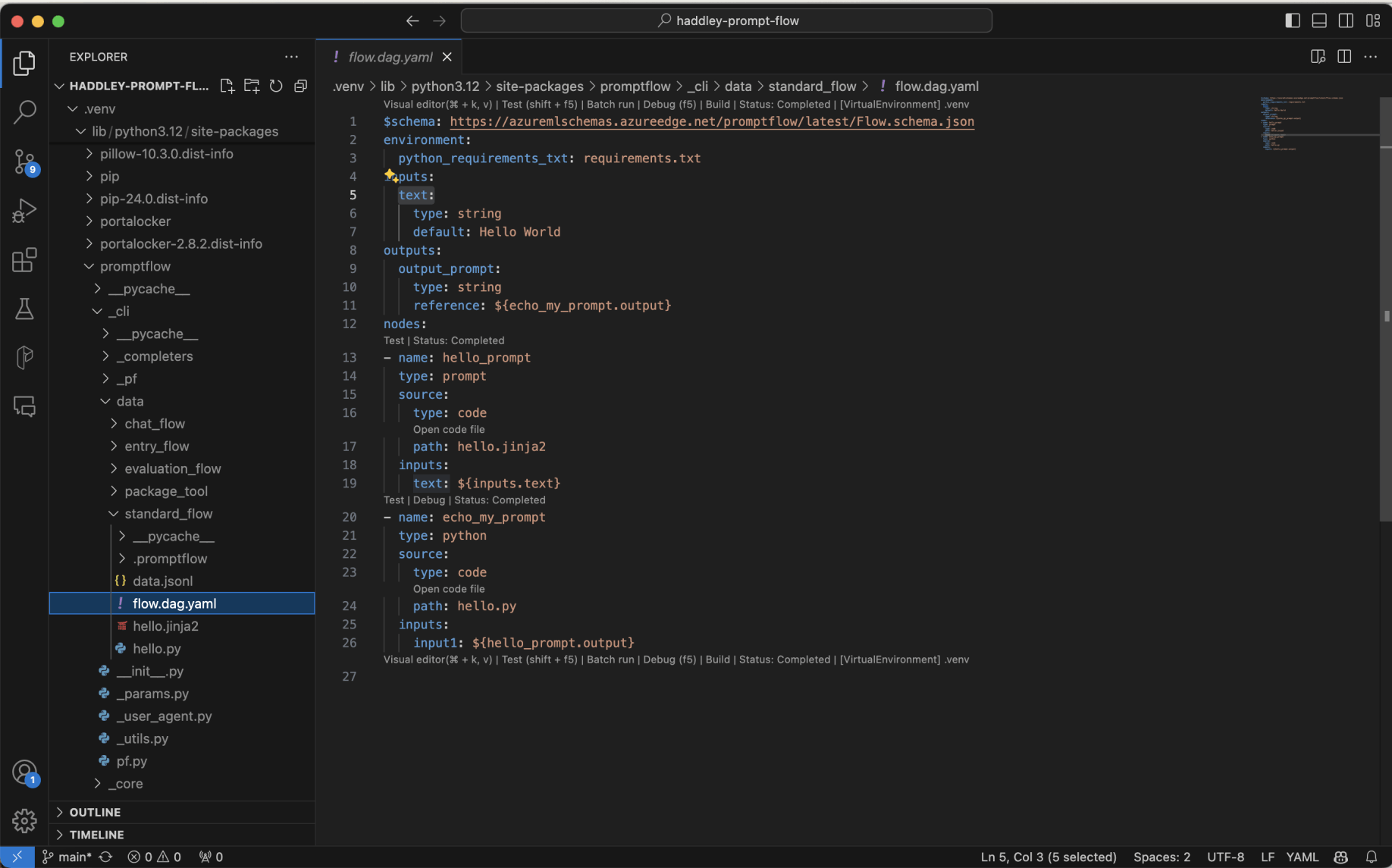

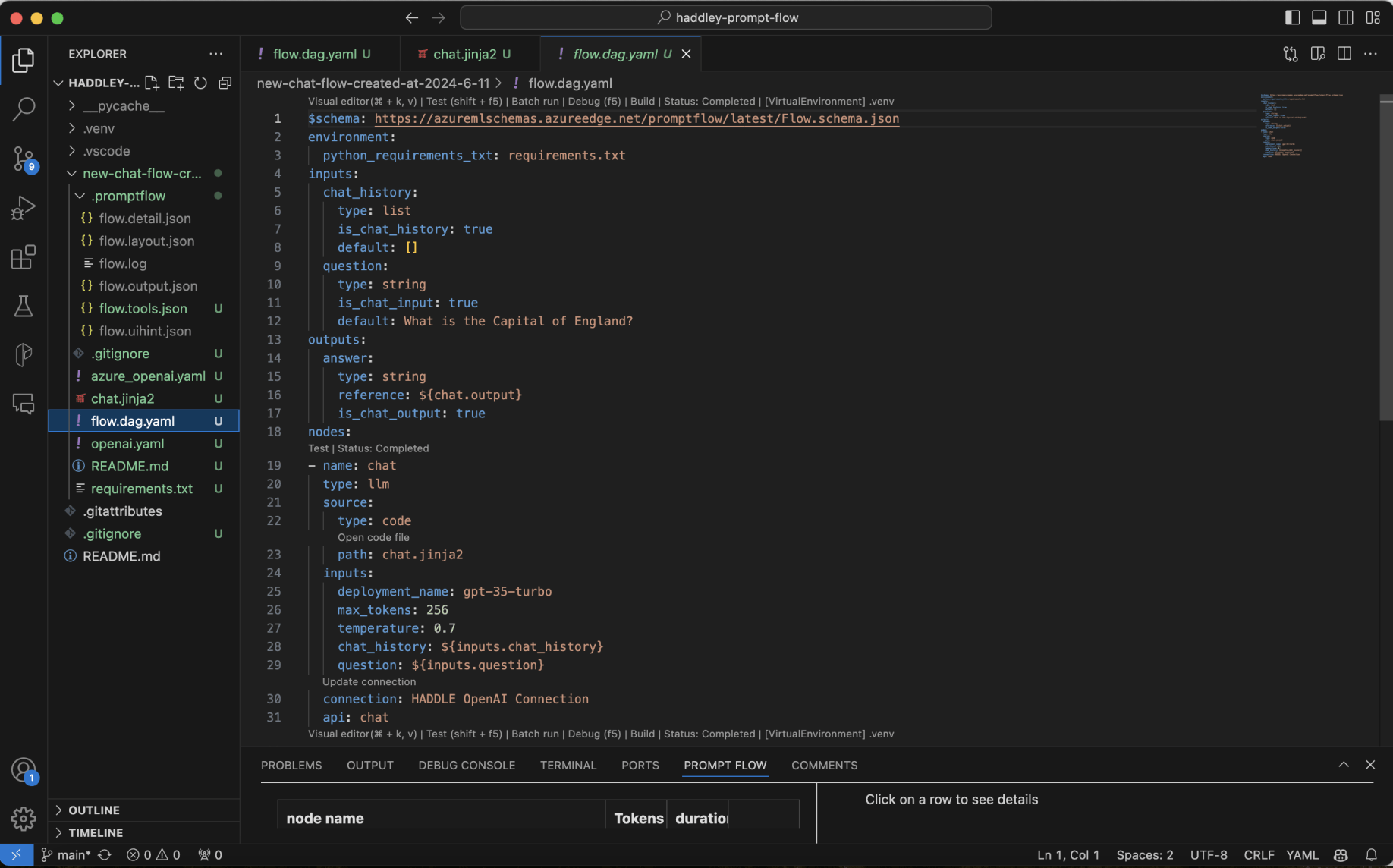

The flow.dag.yaml file describes the flow

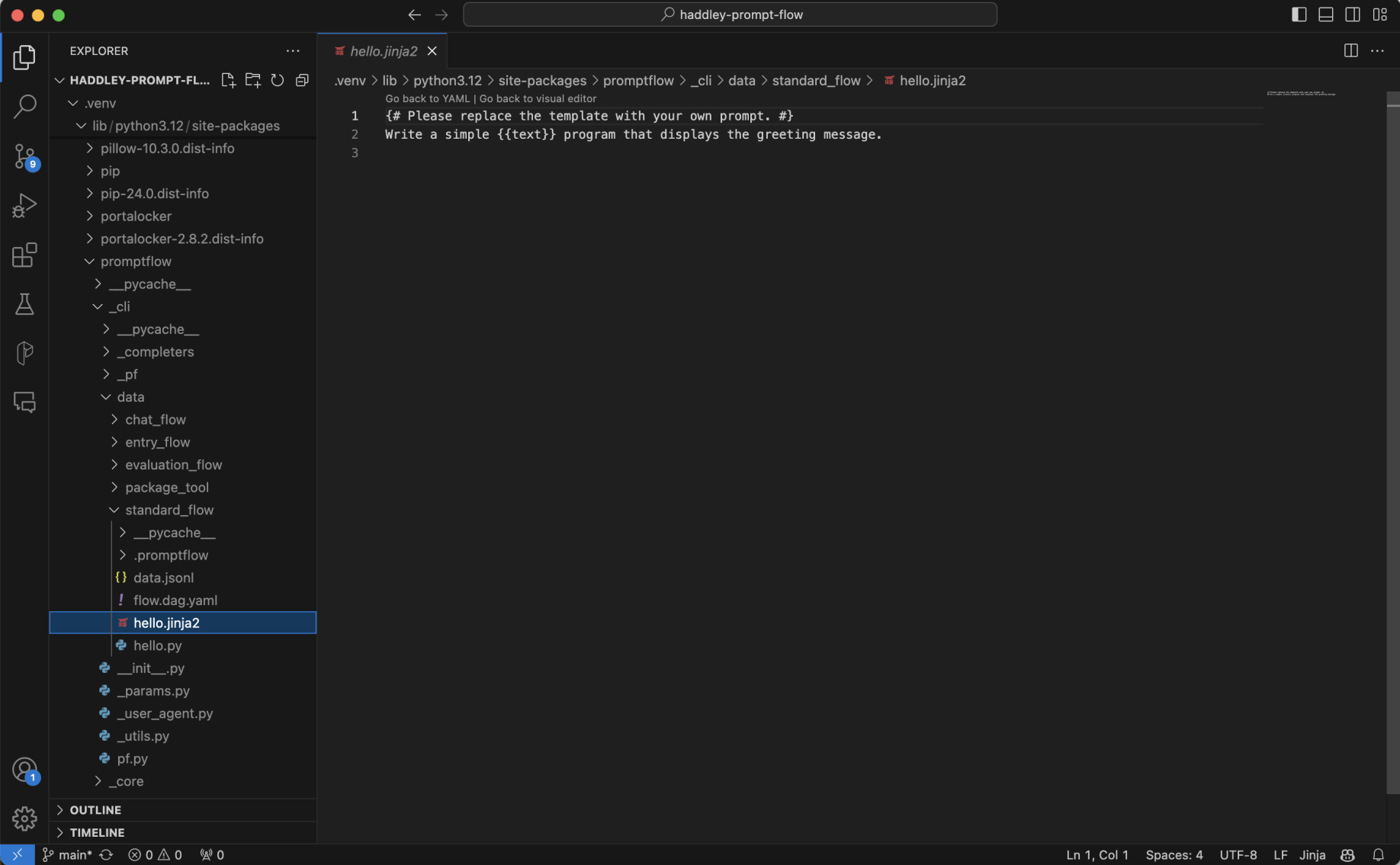

The hello.jinja2 template contains the "Write a simple {{text}}..." text

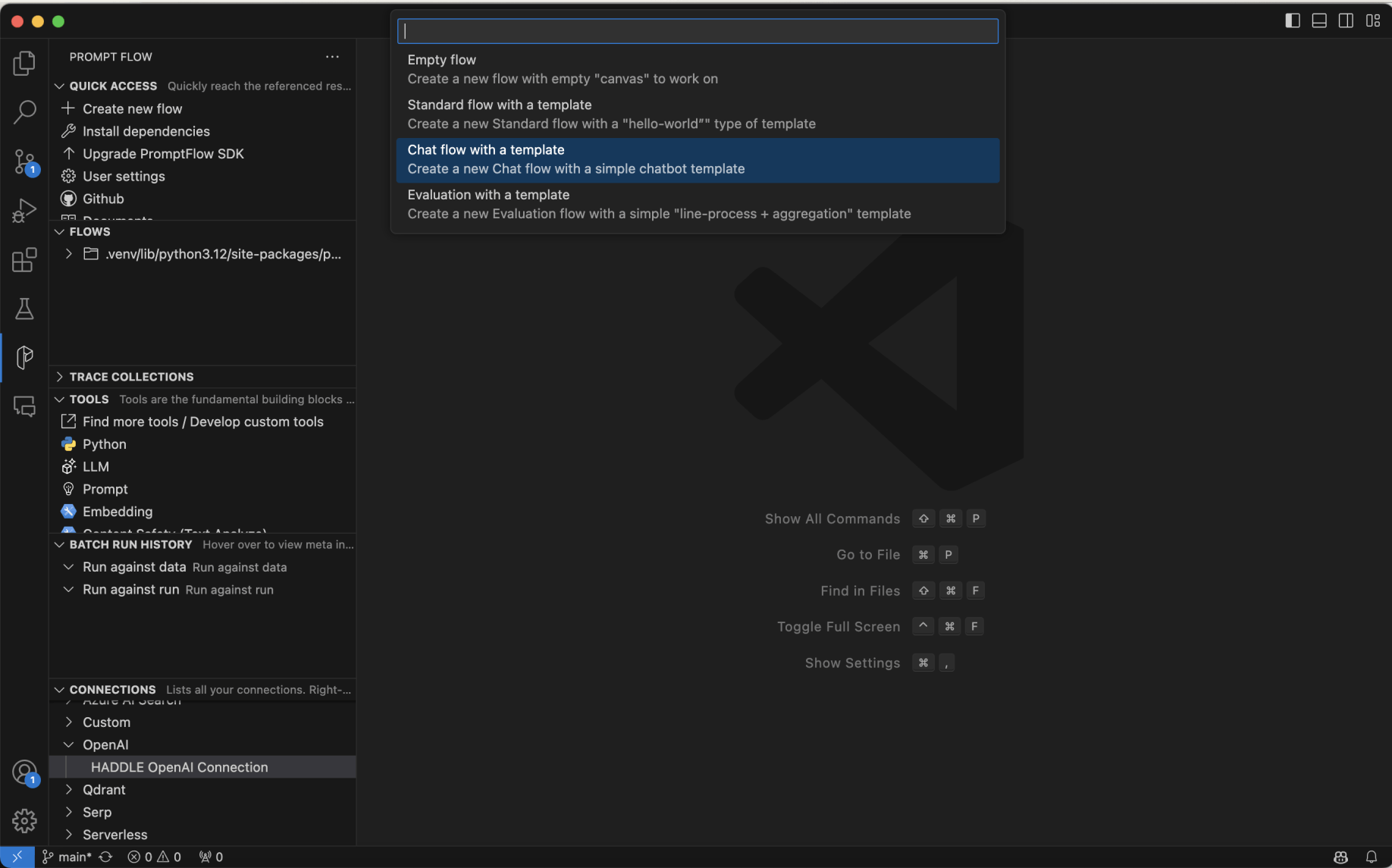
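To make the data flow concrete, here is a plain-Python approximation of what the two steps in standard_flow do: fill in the template, then prefix the result. It is a sketch only. The real flow renders the template with Jinja2 and wires the nodes together through flow.dag.yaml; here a small regular expression stands in for Jinja2, and the function names `render`, `hello_tool` and `run_flow` are hypothetical.

```python
import re

# Template text as it appears in hello.jinja2.
TEMPLATE = "Write a simple {{text}} program that displays the greeting message."


def render(template: str, **values: str) -> str:
    """Replace each {{name}} placeholder with the matching keyword argument.

    This is a simplified stand-in for Jinja2 rendering, not Jinja2 itself.
    """
    def substitute(match: re.Match) -> str:
        return str(values[match.group(1)])

    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


def hello_tool(input1: str) -> str:
    """Mimic the Python step: prefix the rendered prompt with 'Prompt: '."""
    return "Prompt: " + input1


def run_flow(text: str) -> str:
    """Chain the two steps in the same order as the flow."""
    return hello_tool(render(TEMPLATE, text=text))


print(run_flow("Hello World"))
# prints: Prompt: Write a simple Hello World program that displays the greeting message.
```

Keeping the template and the Python step separate mirrors the flow's layout: the prompt wording can be edited without touching code.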

I used the +Create new flow menu item to create a new flow.

I used the Open menu item to view the flow.

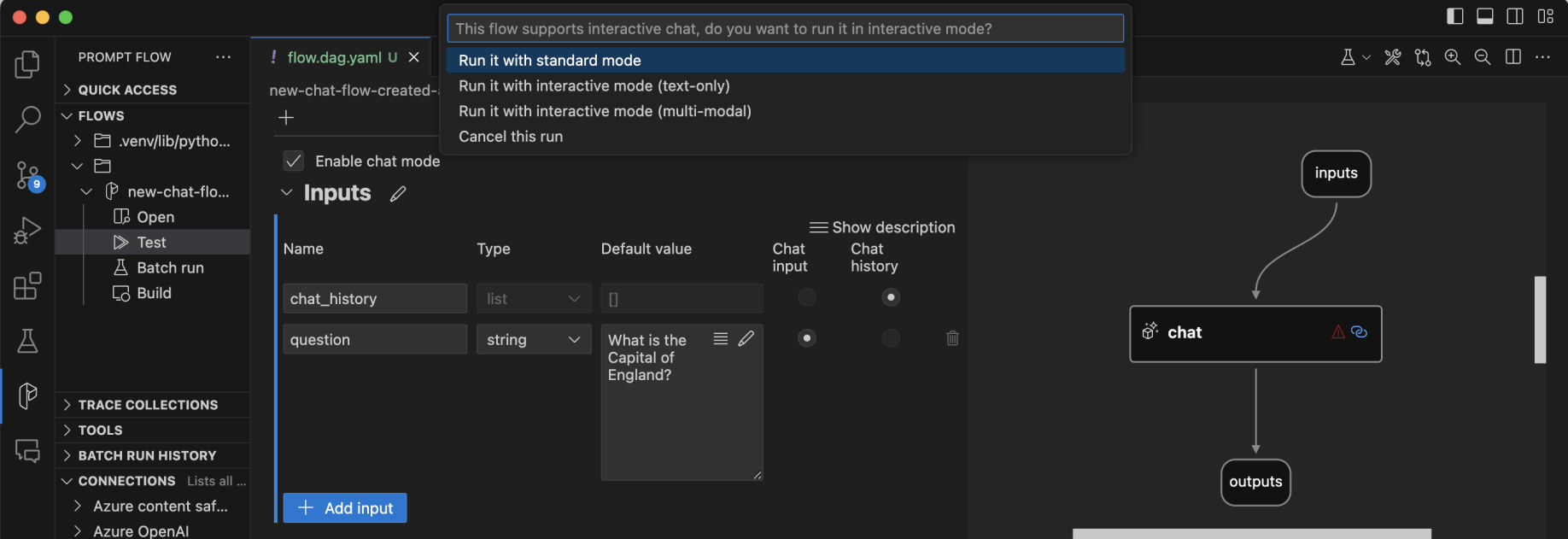

I used the Test menu item to run the flow.

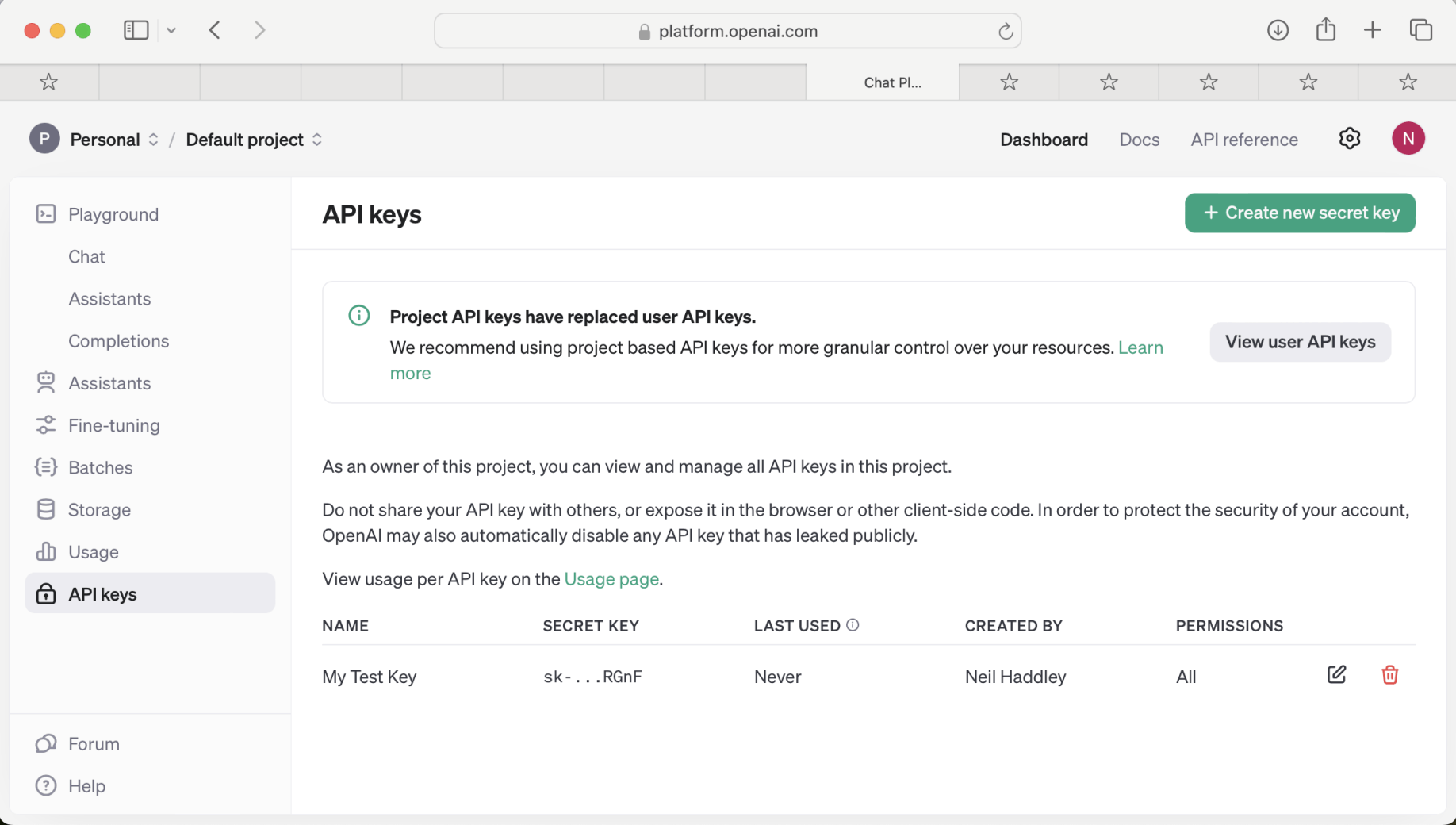

I generated a new OpenAI API key and used it to create a Prompt flow connection

I created a chat flow

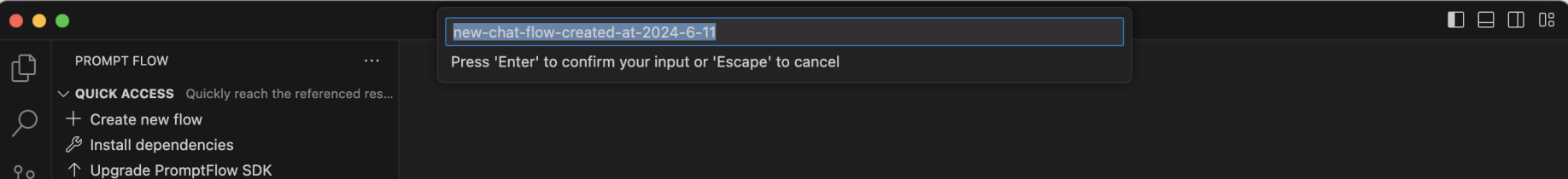

I accepted the default flow name

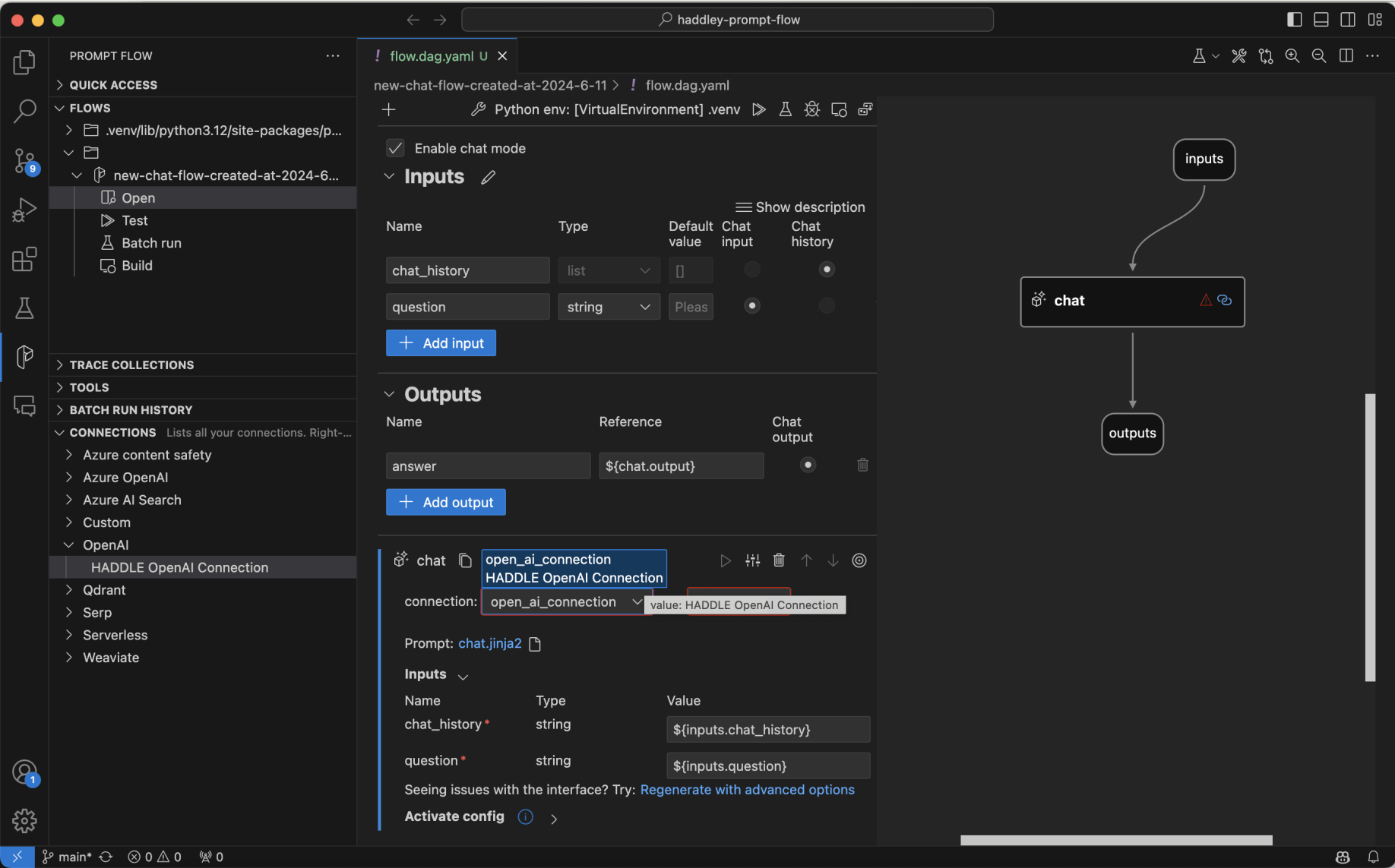

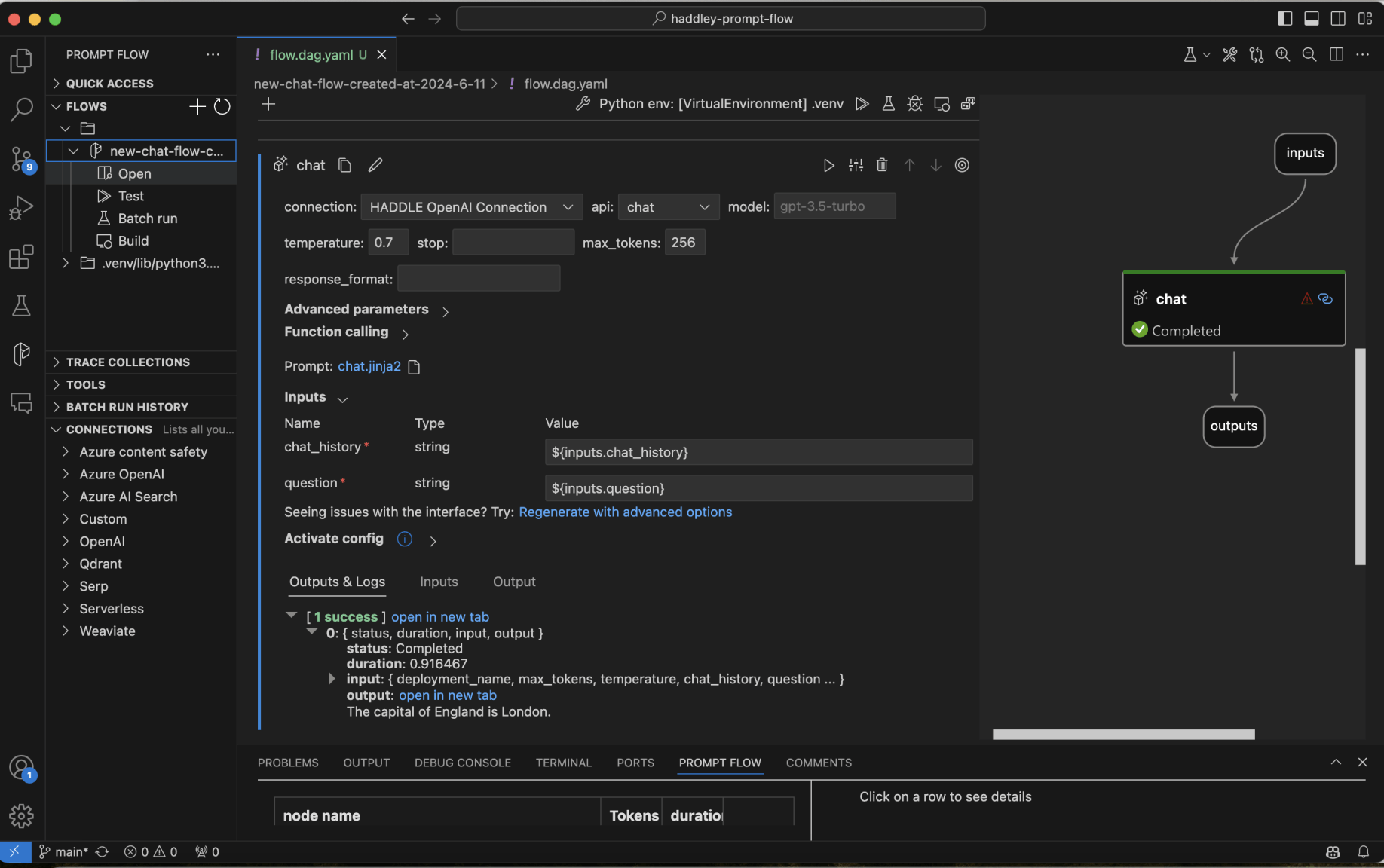

I updated the flow to make use of the OpenAI (large language model) connection

I updated the default question text

I used the Test menu item to run the flow in standard mode

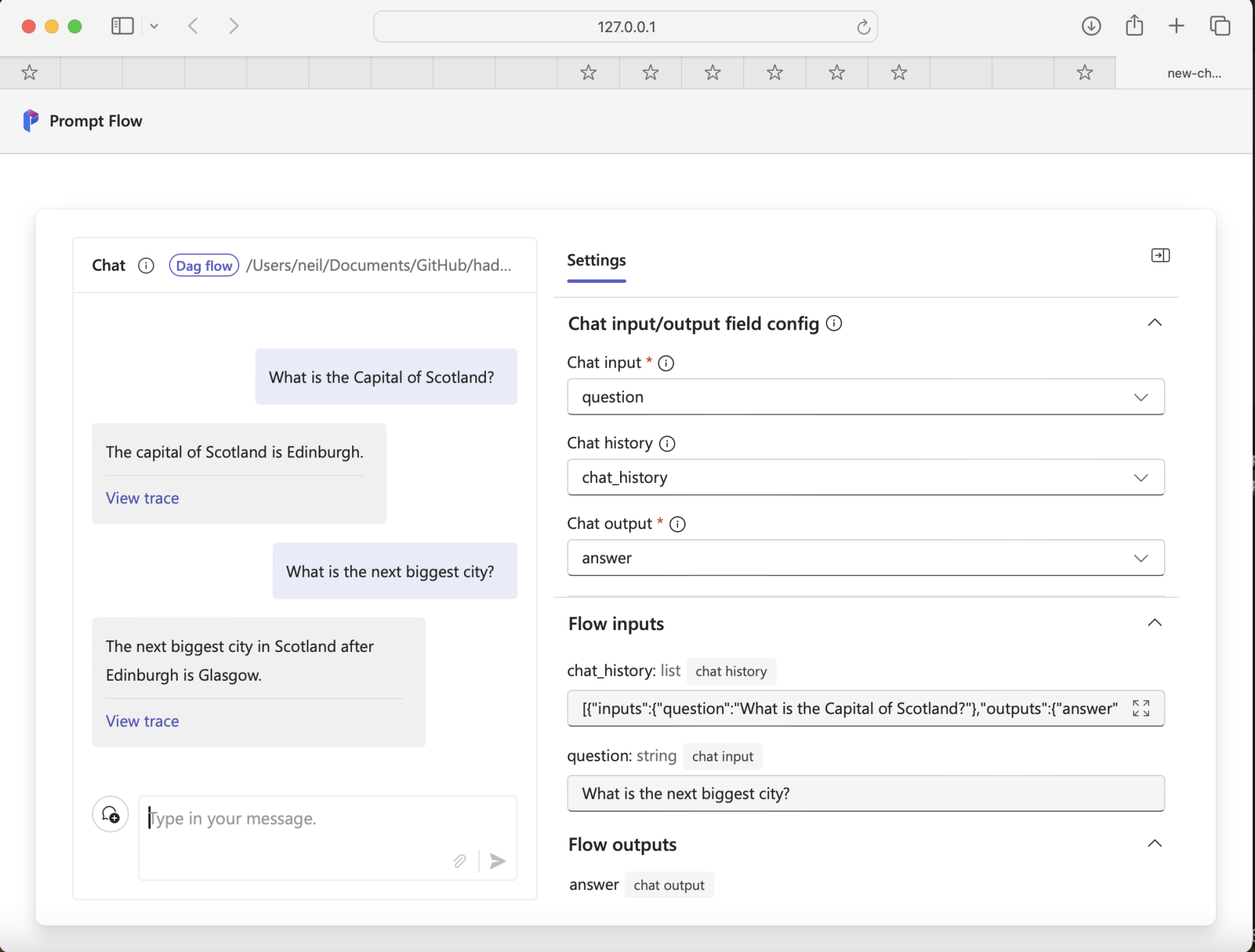

I ran the flow in interactive mode (multi-modal)

The new chat flow included a single llm tool (named "chat")

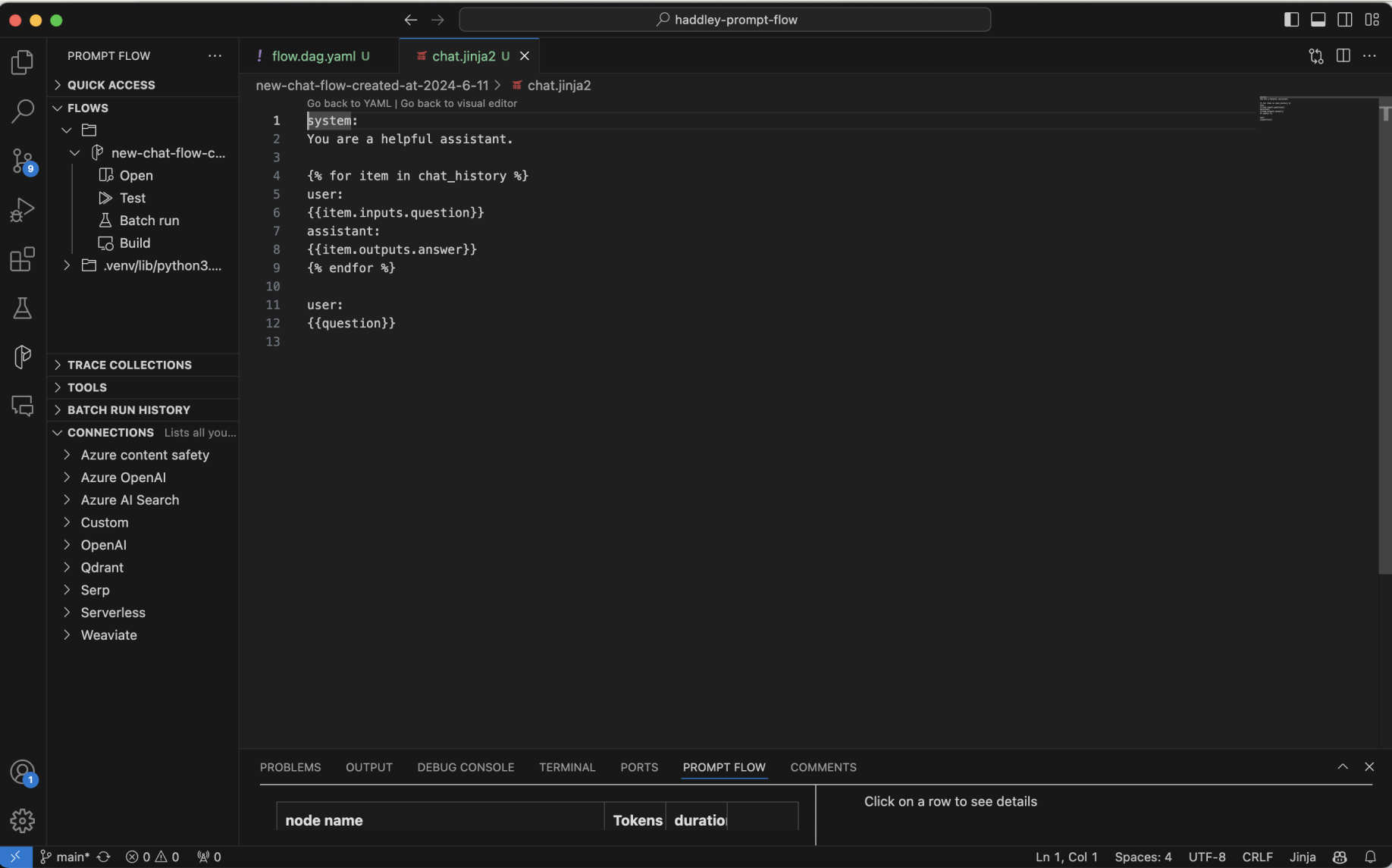

The chat.jinja2 file is used to compose the large language model prompt (with the chat history included)

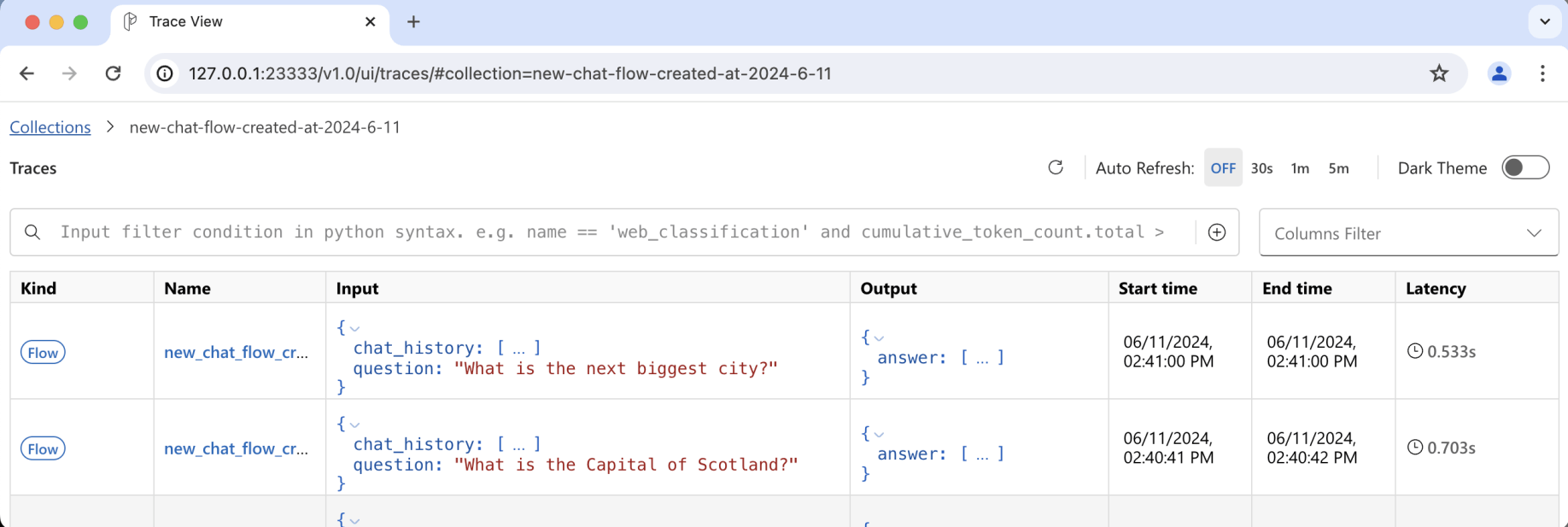
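The effect of including the chat history can be sketched in plain Python. This is an illustration, not the template itself: it assumes each history entry stores the earlier question under `inputs` and the earlier answer under `outputs`, and the function name `build_messages` and the default system prompt are hypothetical.

```python
def build_messages(chat_history, question, system_prompt="You are a helpful assistant."):
    """Compose a chat-style message list: system prompt, prior turns, new question."""
    messages = [{"role": "system", "content": system_prompt}]
    for turn in chat_history:
        # Assumed shape: {"inputs": {"question": ...}, "outputs": {"answer": ...}}
        messages.append({"role": "user", "content": turn["inputs"]["question"]})
        messages.append({"role": "assistant", "content": turn["outputs"]["answer"]})
    # The new question always goes last so the model answers it in context.
    messages.append({"role": "user", "content": question})
    return messages


history = [
    {"inputs": {"question": "What is Prompt flow?"},
     "outputs": {"answer": "A tool for building LLM applications."}},
]
for message in build_messages(history, "Can it run locally?"):
    print(message["role"], ":", message["content"])
```

Because every earlier turn is replayed on each request, the prompt grows with the length of the conversation, which is worth keeping in mind when looking at token usage.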

Prompt flow traces

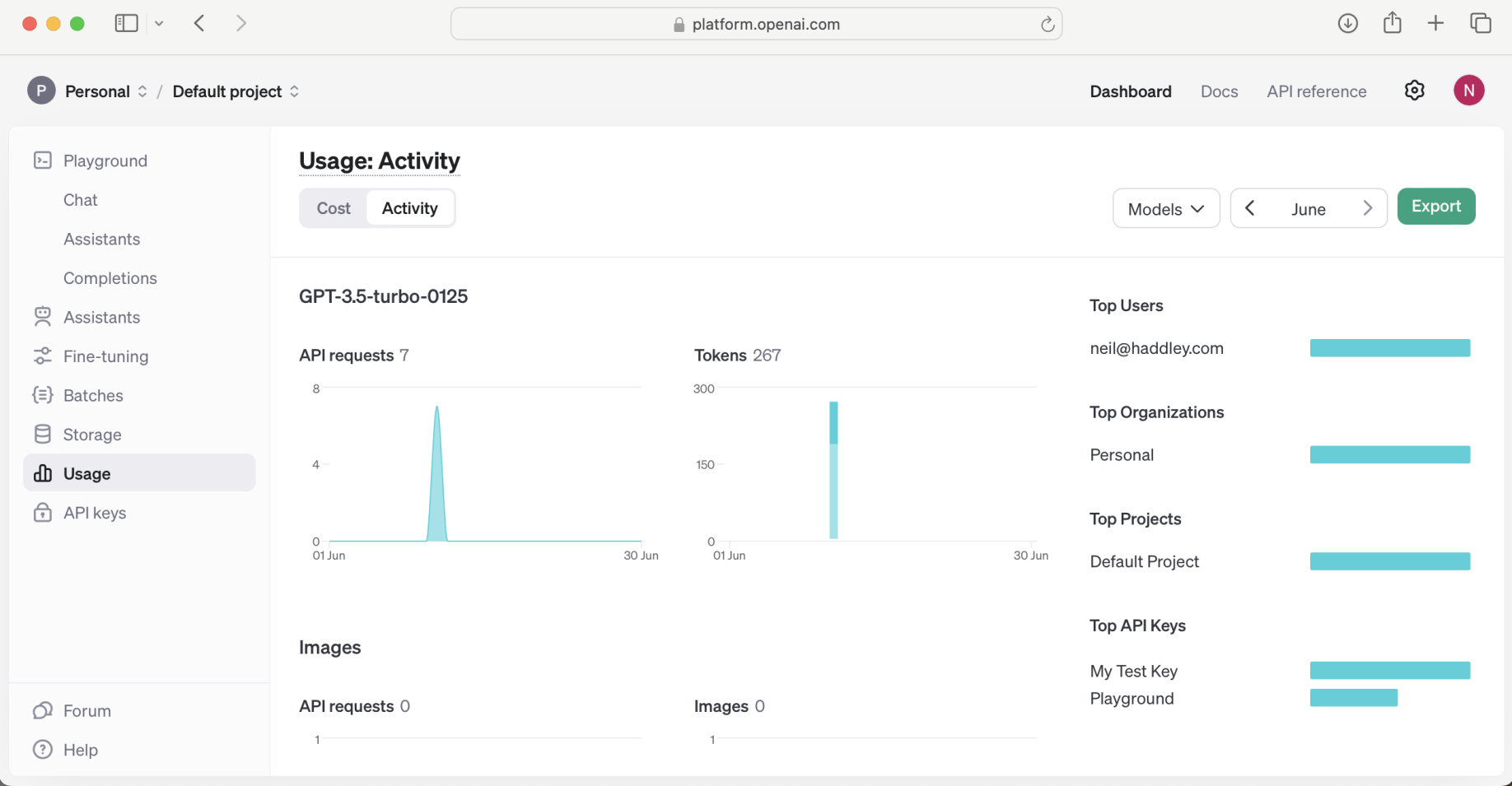

OpenAI activity

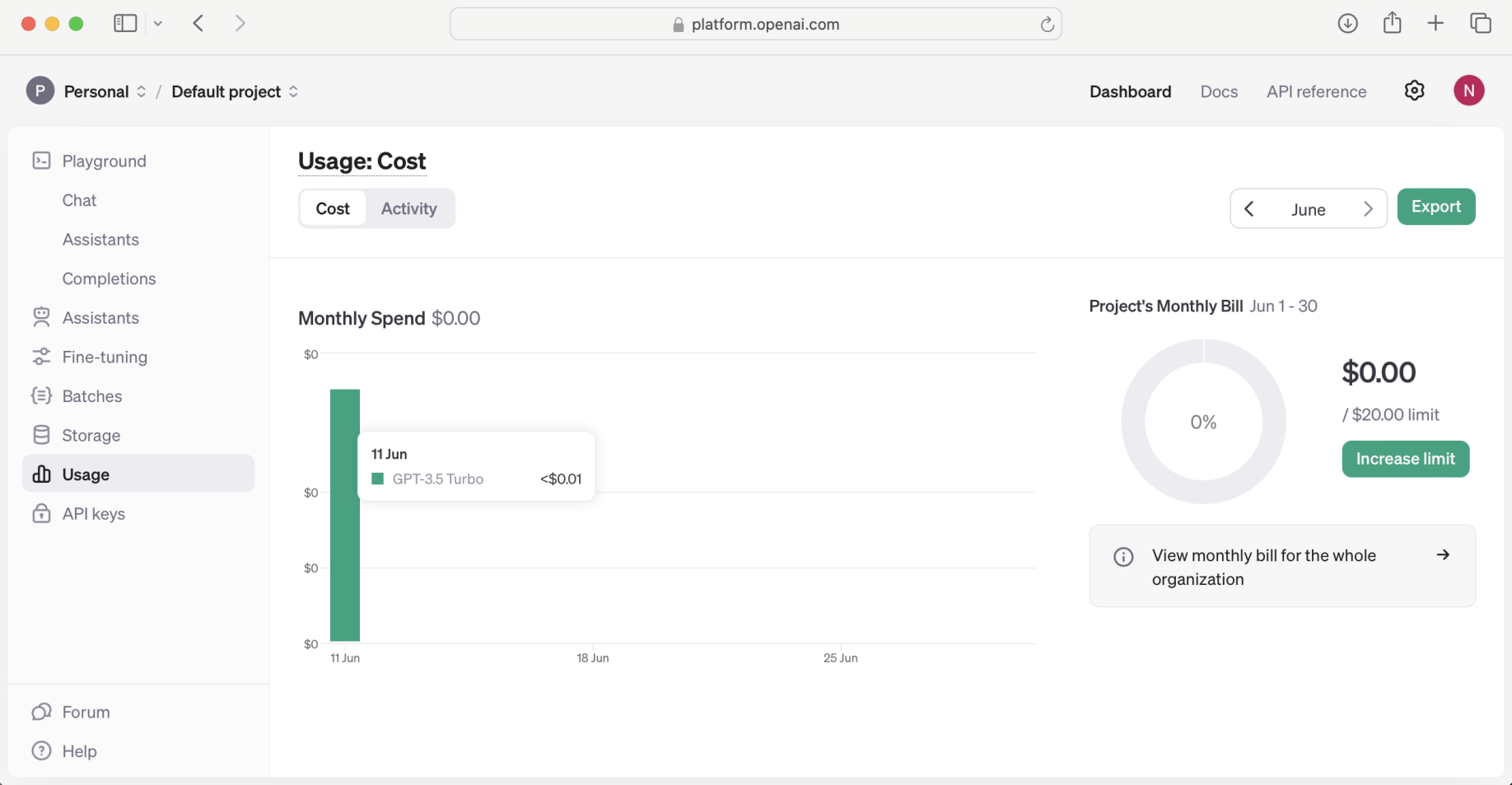

OpenAI cost ($)